Part 1 of our privacy series covered what “privacy” entails, how privacy in blockchain networks differs from web2 privacy, and why it’s difficult to achieve in blockchains.

The main argument of this post is that if the desirable end-state is to have programmable privacy infrastructure that can handle shared private state without any single point of failure, then all roads lead to MPC. We also explore the maturity of MPC and its trust assumptions, highlight alternative approaches, compare tradeoffs, and provide an industry overview.

Are we all building the same thing? Keep reading to find out.

Thanks to Avishay (SodaLabs), Lukas (Taceo), Michael (Equilibrium), and Nico (Arcium) for the discussions that helped shape this post.

What Can Be, Unburdened By What Has Been

The existing privacy infrastructure in blockchains is designed to handle very specific use cases, such as private payments or voting. This is quite a narrow view and mostly reflects what blockchains are currently used for (trading, transfers, and speculation). As Tom Walpo put it - Crypto suffers from a Fermi Paradox:

Besides increasing individual freedom, we believe that privacy is a prerequisite for expanding the design space of blockchains beyond the current speculative meta. Many applications require some private state and/or hidden logic to function properly:

Hidden state: The vast majority of financial use cases require (at minimum) privacy from other users and many multiplayer games are significantly less fun to play without some hidden state (e.g. if everyone at the poker table were to see each other’s cards).

Hidden logic: Some use cases require hiding some logic while still enabling others to use the application, such as a matching engine, on-chain trading strategy, etc.

Empirical analysis (from both web2 and web3) shows most users aren’t willing to pay extra or jump through additional hoops for added privacy, and we agree that privacy is not a selling point in itself. However, it does enable new and (hopefully) more meaningful use cases to exist on top of blockchains - allowing us to break out of the Fermi Paradox.

Privacy enhancing technologies (PETs) and modern cryptography solutions ("programmable cryptography") are the fundamental building blocks for realizing this vision (see appendix for more information about the different solutions available and their tradeoffs).

Three Hypotheses That Shape Our Views

Three key hypotheses shape our views of how we believe privacy infrastructure in blockchains might evolve:

The cryptography will be abstracted away from application developers: Most application developers don't want to (or don't know how to) deal with the cryptography required for privacy. It’s unreasonable to expect them to implement cryptography of their own and build private app-specific chains (e.g. Zcash or Namada) or private applications on top of a public chain (e.g. Tornado Cash). This is simply too much complexity and overhead, and it currently restricts the design space for most developers: they can’t build applications that require privacy guarantees. Because of this, we believe that the complexity of managing the cryptography must be abstracted away from application developers. Two approaches here are programmable privacy infrastructure (a shared private L1/L2) and “confidentiality as a service”, which enables outsourcing confidential compute.

Many use cases (probably more than we think) require a shared private state: As mentioned earlier, many applications require some hidden state and/or logic to function properly. Shared private state is a subset of this, where multiple parties compute over the same piece of private state. While we could trust a centralized party to do it for us and call it a day, we ideally want to do it in a trust-minimized manner to avoid single points of failure. Zero knowledge proofs (ZKPs) alone cannot achieve this - we need to leverage additional tools such as trusted execution environments (TEE), fully homomorphic encryption (FHE), and/or multi-party computation (MPC).

Larger shielded sets maximize privacy: Most information is revealed when entering or exiting the shielded set, so we should try to minimize that. Having multiple private applications built on top of the same blockchain can help strengthen privacy guarantees by increasing the number of users and transactions within the same shielded set.

End Game of Privacy Infrastructure

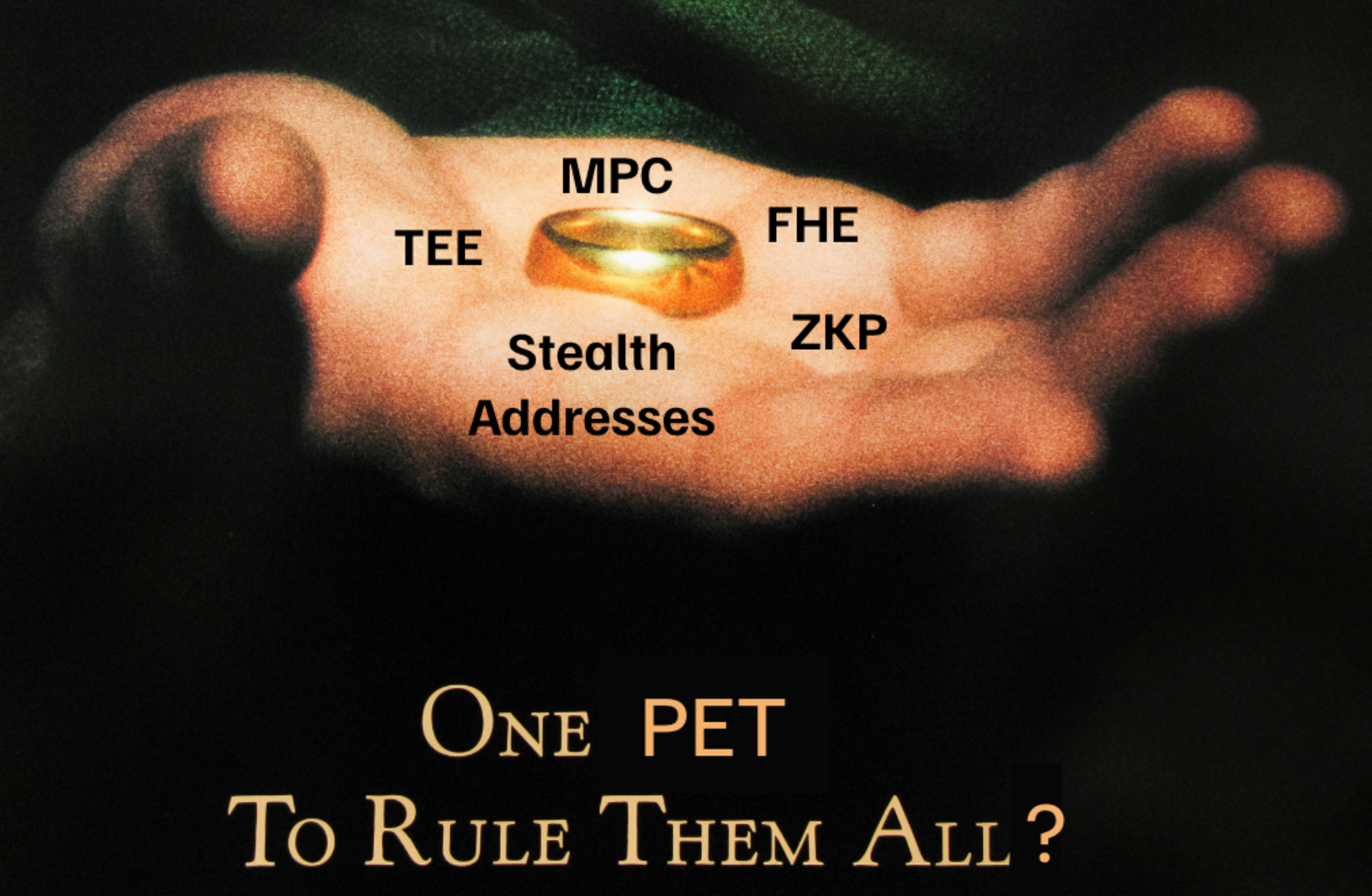

With the above hypotheses in mind - what is the end game for privacy infrastructure in blockchains? Is there one approach that is suitable for every application? One privacy enhancing technology to rule them all?

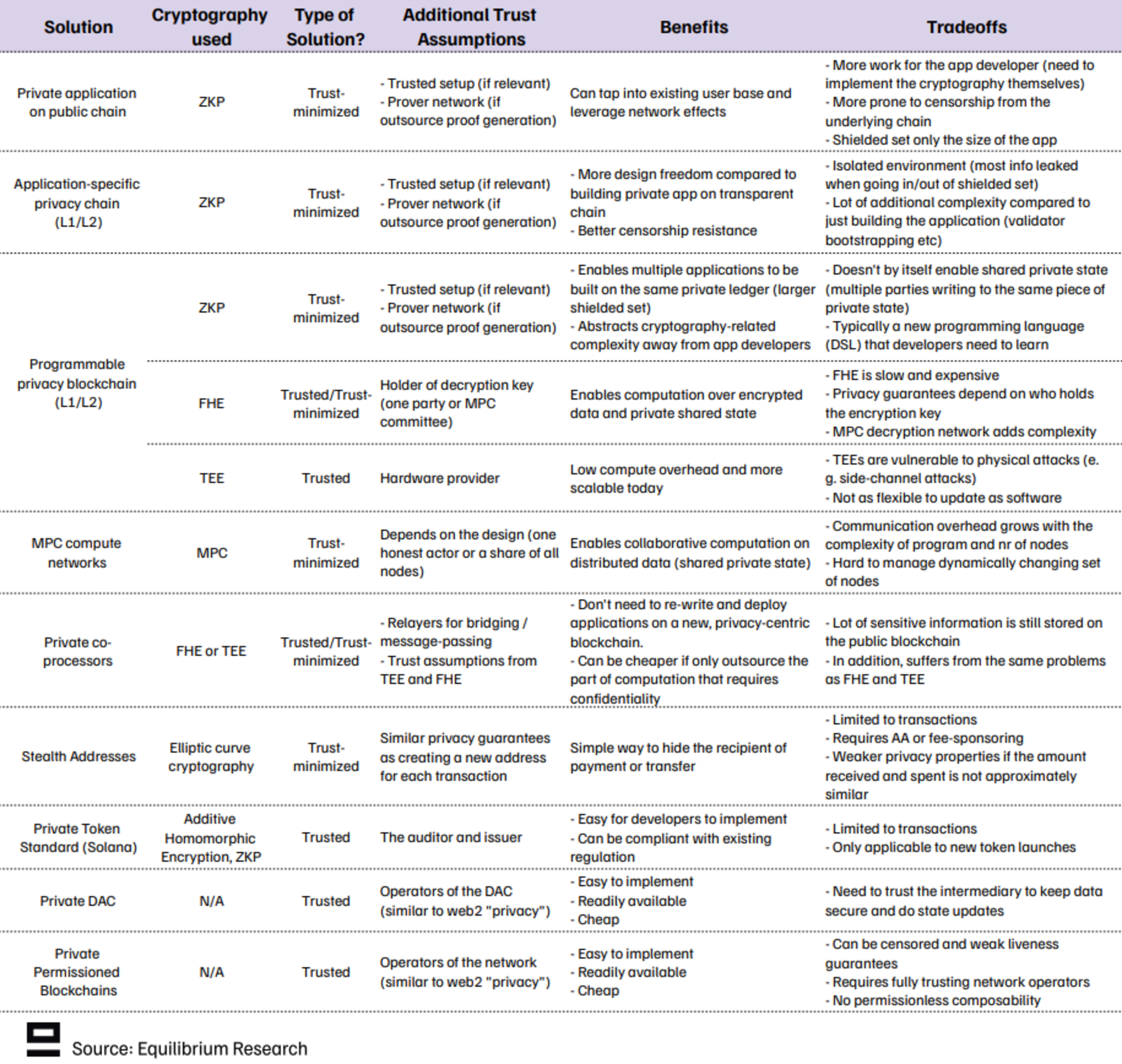

Not quite. All of these have different tradeoffs and we are already seeing them being combined in various ways. In total, we’ve identified 11 different approaches (see appendix).

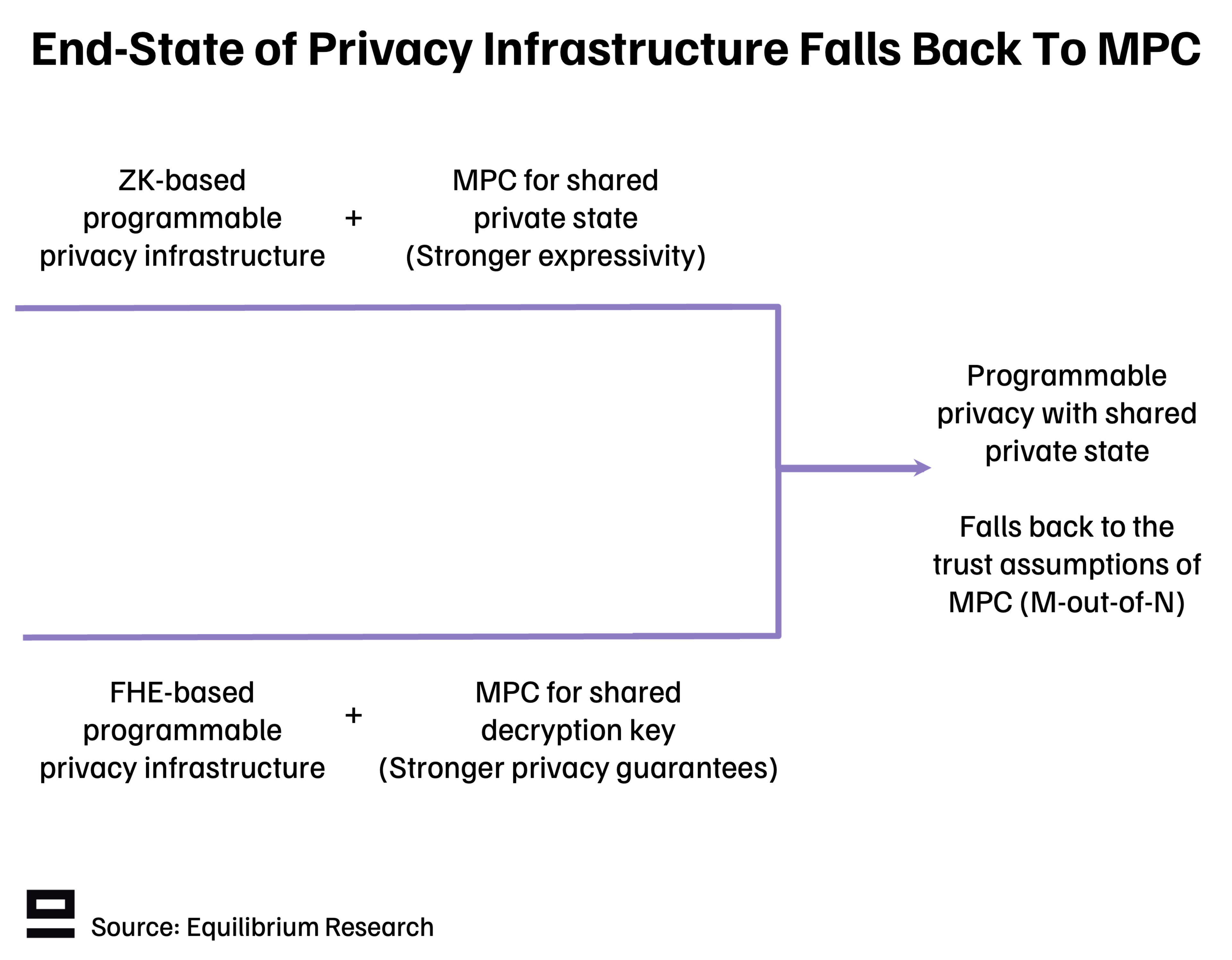

Today, the two most popular approaches to building privacy infrastructure in blockchains leverage either ZKPs or FHE. However, both have fundamental flaws:

ZK-based privacy protocols with client-side proving can offer strong privacy guarantees but don’t enable multiple parties to compute over the same private state. This limits expressivity, i.e. what types of applications developers can build.

FHE enables computation over encrypted data and shared private state, but raises the question of who owns that state, i.e. who holds the decryption key. This limits the strength of privacy guarantees and how much we can trust that what is private today remains so tomorrow.

If the desirable end-state is to have programmable privacy infrastructure that can handle shared private state without any single point of failure, then both roads lead to MPC:

Note that even though these two approaches are ultimately converging, MPC is leveraged for different things:

ZKP networks: MPC is used to add expressivity by enabling computation over a shared private state.

FHE networks: MPC is used to increase security and strengthen privacy guarantees by distributing the decryption key to an MPC committee (rather than a single third party).

While the discussion is starting to shift towards a more nuanced view, the guarantees behind these different approaches remain under-explored. Given that our trust assumptions boil down to those of MPC, the three key questions to ask are:

How strong are the privacy guarantees that MPC protocols can provide in blockchains?

Is the technology mature enough? If not, what are the bottlenecks?

Given the strength of the guarantees and the overhead it introduces, does it make sense compared to alternative approaches?

Let’s address these questions in more detail.

Analyzing Risks and Weaknesses with MPC

Whenever a solution uses FHE, one always needs to ask: "Who holds the decryption key?". If the answer is "the network", the follow-up question is: “Which real entities make up this network?”. The latter question is relevant to all use cases relying on MPC in some shape or form.

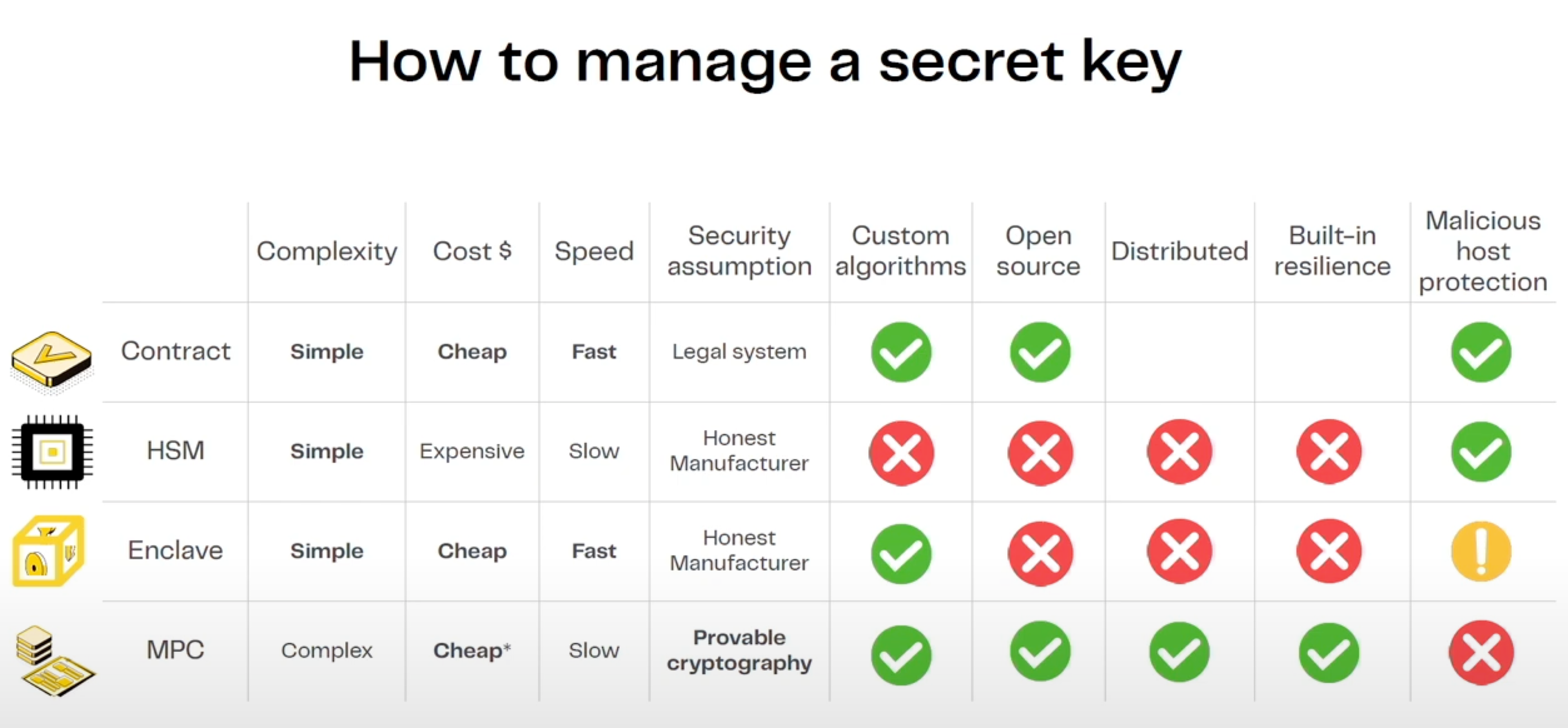

Source: Zama talk at ETH CC

The main risk with MPC is collusion, i.e. enough parties acting maliciously and colluding to decrypt data or misappropriate computation. Collusion can be agreed off-chain and is only revealed if the malicious parties do something that makes it obvious (blackmailing, minting tokens out of thin air, etc). Needless to say, this has significant implications for the privacy guarantees that the system can provide. The collusion risk depends on:

What threshold of malicious parties can the protocol withstand?

Which parties make up the network and how trustworthy are they?

How many different parties participate in the network and how are they distributed? Are there any common attack vectors?

Is the network permissionless or permissioned (economic stake vs reputation/legal based)?

What is the punishment for malicious behavior? Is colluding the game-theoretically optimal outcome?
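To get a rough feel for how the threshold affects collusion risk, the sketch below computes the chance that at least k of n operators misbehave. It is a deliberately simplified model: it assumes each operator misbehaves independently with the same probability p, which ignores the common attack vectors and off-chain coordination mentioned above. The function name `prob_at_least` and the numbers used are hypothetical, chosen only for illustration.

```python
from math import comb


def prob_at_least(k: int, n: int, p: float) -> float:
    """Probability that at least k of n operators misbehave.

    Assumption (not from the post): operators act independently and each
    misbehaves with the same probability p, i.e. a plain binomial model.
    """
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


if __name__ == "__main__":
    n, p = 4, 0.10  # hypothetical committee size and per-operator probability
    for threshold in (n, 2):
        print(f"need {threshold} of {n} colluders:", prob_at_least(threshold, n, p))
```

Under these made-up numbers, requiring all 4 operators to collude gives a probability of about 0.01%, while requiring only 2 gives about 5%. Real operators are rarely independent, so this is better read as an optimistic estimate than as a measurement.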

1. How strong are the privacy guarantees that MPC protocols can provide in blockchains?

TLDR: Not as strong as we would like, but stronger than relying on just one centralized third party.

The threshold required to decrypt depends on the MPC scheme chosen - largely a tradeoff between liveness (“guaranteed output delivery”) and security. You can have an N/N scheme that is very secure but breaks down if just one node goes offline. On the other hand, N/2 or N/3 schemes are more robust but have a higher risk of collusion.

The two conditions to balance are:

The secret information is never leaked (e.g. the decryption key)

The secret information never disappears (even if say 1/3 of the parties suddenly leave).

The scheme chosen varies across implementations. For example, Zama is targeting N/3, whereas Arcium is currently implementing an N/N scheme but aims to later support schemes with higher liveness guarantees (and bigger trust assumptions).
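The liveness versus security tradeoff can be illustrated with textbook Shamir secret sharing, where any t of n shares reconstruct a secret and fewer reveal nothing. This is a minimal sketch for intuition only, not the scheme used by Zama, Arcium, or any other team mentioned here; the field modulus and the function names `split` and `reconstruct` are assumptions made for the example.

```python
import secrets

PRIME = 2**127 - 1  # a Mersenne prime used as the field modulus (illustrative choice)


def split(secret: int, n: int, t: int) -> list[tuple[int, int]]:
    """Split `secret` into n shares so that any t of them reconstruct it."""
    # Random polynomial of degree t-1 whose constant term is the secret.
    coeffs = [secret] + [secrets.randbelow(PRIME) for _ in range(t - 1)]
    shares = []
    for x in range(1, n + 1):
        y = 0
        for c in reversed(coeffs):  # Horner evaluation of the polynomial at x
            y = (y * x + c) % PRIME
        shares.append((x, y))
    return shares


def reconstruct(shares: list[tuple[int, int]]) -> int:
    """Lagrange-interpolate the polynomial at x = 0 from the given shares."""
    secret = 0
    for i, (xi, yi) in enumerate(shares):
        num, den = 1, 1
        for j, (xj, _) in enumerate(shares):
            if i != j:
                num = (num * -xj) % PRIME
                den = (den * (xi - xj)) % PRIME
        secret = (secret + yi * num * pow(den, -1, PRIME)) % PRIME
    return secret


if __name__ == "__main__":
    key = 123456789
    n_of_n = split(key, n=4, t=4)   # secure against 3 colluders, but no node may go offline
    low_t = split(key, n=4, t=2)    # survives 2 nodes leaving, but 2 colluders suffice
    print(reconstruct(n_of_n) == key)      # all four shares present
    print(reconstruct(low_t[:2]) == key)   # any two shares are enough
    print(reconstruct(n_of_n[:3]) == key)  # three of four: the key is effectively lost
```

With t = n, a single missing share means the secret cannot be recovered, which is the liveness problem of an N/N scheme. Lowering t fixes that at the cost of letting a smaller coalition reconstruct the key.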

One compromise along this tradeoff frontier would be to have a mixed solution:

High-trust committee that does the key handling with e.g. N/3 threshold.

Computation committees that are rotational and have e.g. N-1 threshold (or multiple different computation committees with different characteristics for users to choose from).

While this is appealing in theory, it also introduces additional complexity such as how the computation committee would interact with the high-trust-committee.

Another way to strengthen security guarantees is to run the MPC within trusted hardware so that key shares are kept inside a secure enclave. This makes it more difficult to extract or use the key shares for anything other than what is defined by the protocol. At least Zama and Arcium are exploring the TEE angle.

More nuanced risks include edge cases around things like social engineering, where for example a senior engineer is employed by all companies included in the MPC cluster over 10-15 years.

2. Is the technology mature enough? If not, what are the bottlenecks?

From a performance standpoint, the key challenge with MPC is the communication overhead. It grows with the complexity of computation and the number of nodes that are part of the network (more back-and-forth communication is required). For blockchain use cases, this leads to two practical implications:

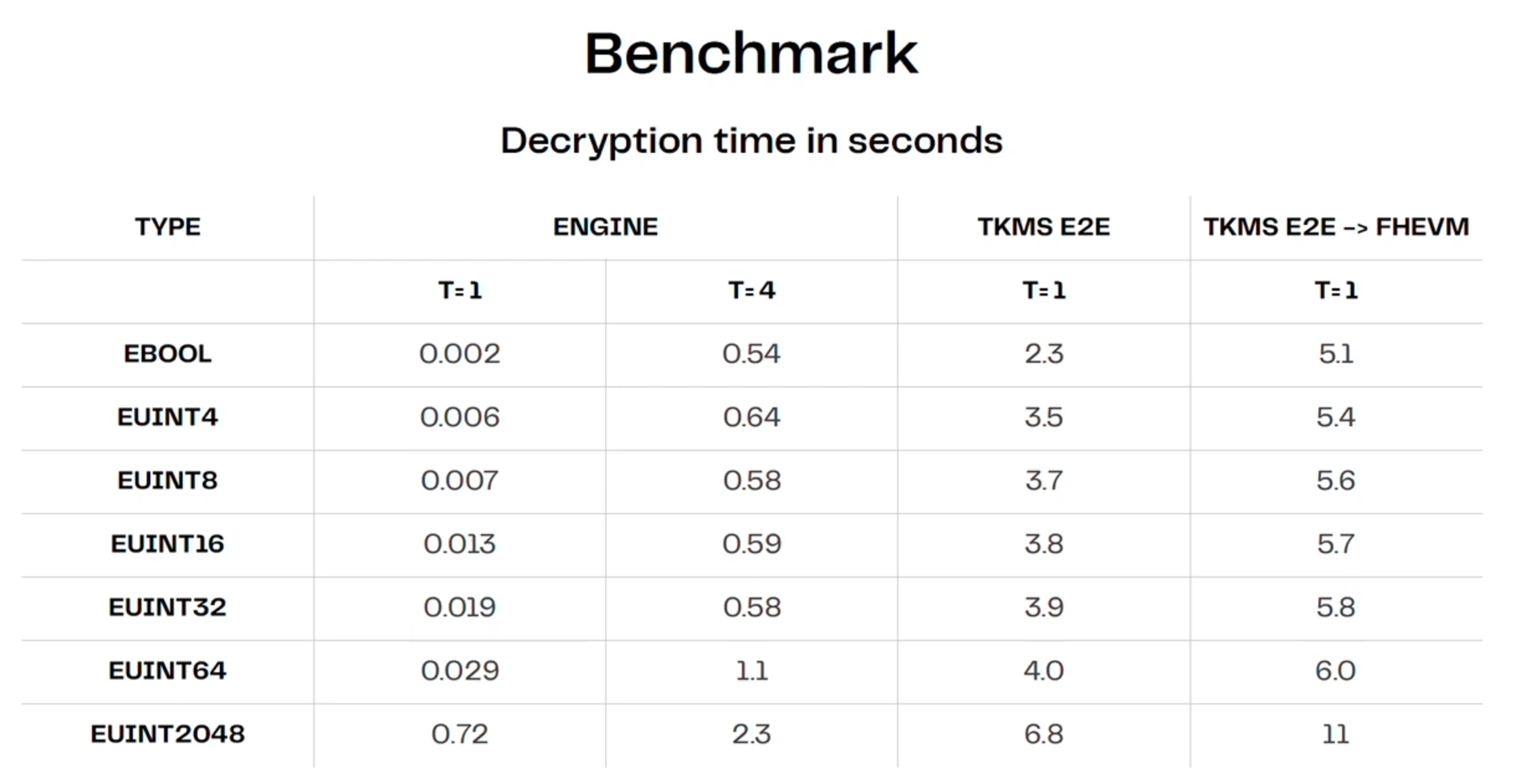

Small operator set: To keep communication overhead manageable, most existing protocols are currently restricted to small operator sets. For example, Zama’s decryption network currently allows for a maximum of 4 nodes (although they plan to expand to 16). Based on initial benchmarks published by Zama for their decryption network (TKMS), it takes 0.5-1s to decrypt even with just a 4-node cluster (full e2e flow takes much longer). Another example is Taceo's MPC implementation for Worldcoin's iris database, which has 3 parties (with a 2/3 honest party assumption).

Source: Zama talk at ETH CC

Permissioned operator set: In most cases, the operator set is permissioned. This means we rely on reputation and legal contracts rather than economic or cryptographic security. The main challenge with a permissionless operator set is that there is no way to know if people collude off-chain. In addition, it would require regular bootstrapping or redistribution of the key share for nodes to be able to dynamically enter/exit the network. While permissionless operator sets are the end goal and there is ongoing research on how to extend a PoS mechanism for a threshold MPC (for example by Zama), the permissioned route seems like the best way forward for now.

Alternative Approaches

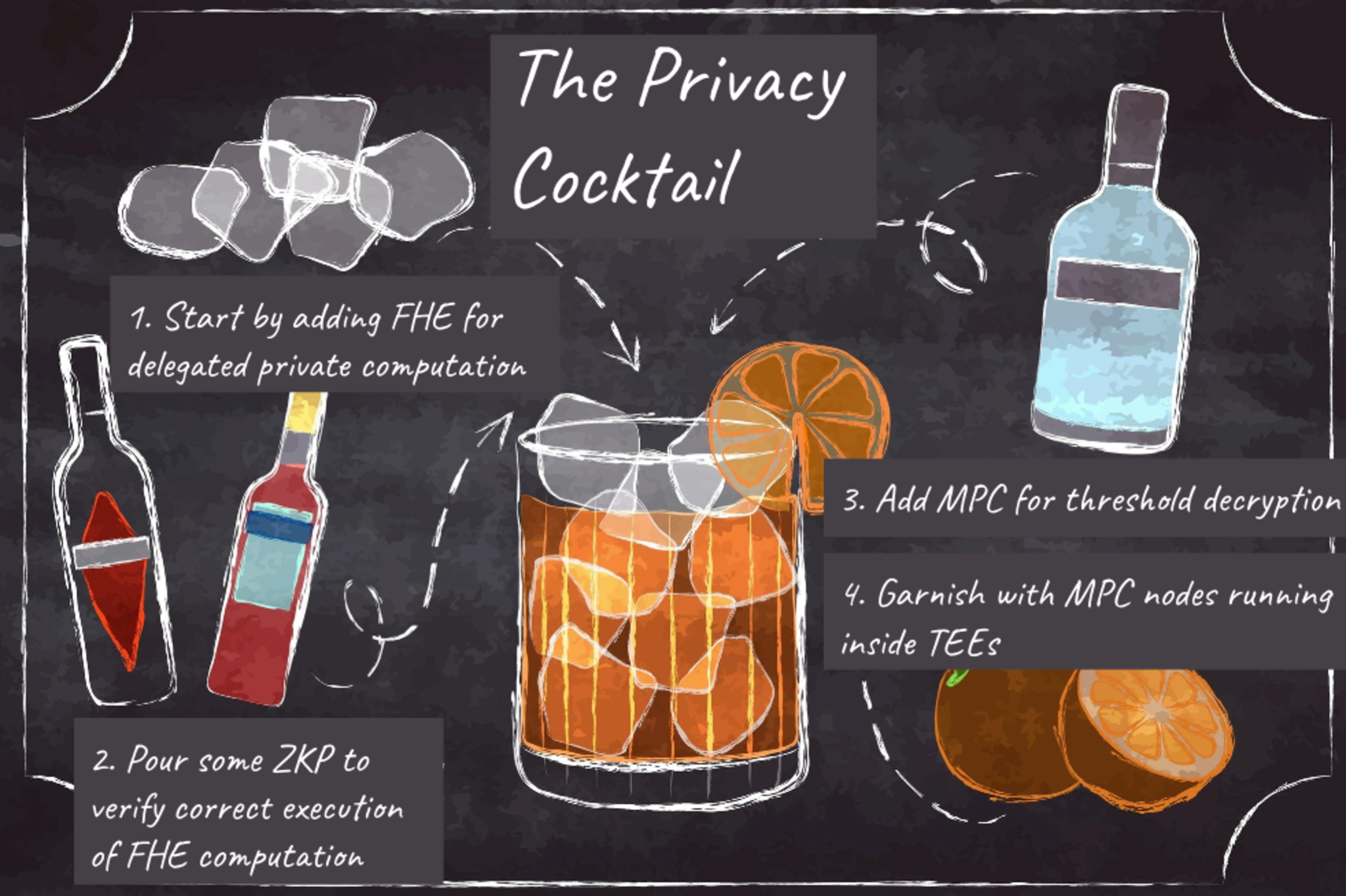

The full-blown privacy cocktail consists of:

FHE for delegated private computation

ZKP for verification that the FHE computation was correctly executed

MPC for threshold decryption

Each MPC node runs within a TEE for additional security

This is complex, introduces a lot of unexplored edge cases, has high overhead, and might not be practically feasible for many years to come. Another risk is the false sense of security one might get from adding multiple complex concepts on top of each other. The more complexity and trust assumptions we add, the more difficult it becomes to reason about the security of the overall solution.

Is it worth it? Maybe, but it’s also worth exploring alternative approaches that might bring significantly better computational efficiency at the expense of only slightly weaker privacy guarantees. As Lyron from Seismic noted - we should focus on the simplest solution that satisfies our criteria for the level of privacy required and acceptable tradeoffs, rather than over-engineering just for the sake of it.

1. Using MPC Directly For General Computation

If both ZK and FHE ultimately fall back to the trust assumptions of MPC, why not use MPC for computation directly? This is a valid question and something that teams such as Arcium, SodaLabs (used by Coti v2), Taceo, and Nillion are attempting to do. Note that MPC takes many forms, but of the three main approaches, we are here referring to secret sharing and garbled circuit (GC) based protocols, not FHE-based protocols that use MPC for decryption.

While MPC is already used for simple computations such as distributed signatures and more secure wallets, the main challenge with using MPC for more general computation is the communication overhead (grows with both the complexity of computation and the number of nodes involved).
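As a toy illustration of secret-sharing-based MPC and where the communication comes from, the sketch below has n parties compute the sum of their private inputs using additive shares. The message count assumes every party sends one point-to-point message to every other party in each of the two rounds; the ring size and the names `share` and `private_sum` are assumptions for this example and do not describe any specific team's protocol.

```python
import secrets

MOD = 2**64  # shares live in the integers modulo 2^64 (illustrative choice)


def share(value: int, n: int) -> list[int]:
    """Split `value` into n additive shares that sum to it modulo MOD."""
    parts = [secrets.randbelow(MOD) for _ in range(n - 1)]
    parts.append((value - sum(parts)) % MOD)
    return parts


def private_sum(inputs: list[int]) -> tuple[int, int]:
    """Sum private inputs; returns (result, number of point-to-point messages)."""
    n = len(inputs)
    # Round 1: party i deals one share of its input to every party j.
    dealt = [share(v, n) for v in inputs]
    messages = n * (n - 1)
    # Local step: party j adds up the shares it holds (no communication).
    partial = [sum(dealt[i][j] for i in range(n)) % MOD for j in range(n)]
    # Round 2: every party sends its partial sum to every other party.
    messages += n * (n - 1)
    return sum(partial) % MOD, messages


if __name__ == "__main__":
    print(private_sum([10, 20, 12]))   # 3 parties
    print(private_sum([1] * 16)[1])    # message count for 16 parties
```

Even for a plain sum, the number of messages grows quadratically with the number of parties in this model (12 messages for 3 parties, 480 for 16). Multiplications need additional interaction on top of that, which is why general computation is much harder than a single signature or sum.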

There are some ways to reduce the overhead, such as by doing the pre-processing (i.e. the most expensive parts of the protocol) in advance and offline - something both Arcium and SodaLabs are exploring. The computation is then executed in the online phase, which consumes some of the data produced in the offline phase. This significantly reduces the overall communication overhead.
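One well-known way to split work into offline and online phases in secret-sharing-based MPC is multiplication with Beaver triples. The sketch below is a textbook version for intuition, not a description of what Arcium or SodaLabs implement. As a loud simplification, a single trusted dealer stands in for the offline phase; real protocols generate the triples with a distributed (and expensive) preprocessing protocol. All names (`offline_triple`, `online_multiply`, `open_shares`) are hypothetical.

```python
import secrets

P = 2**61 - 1  # prime field modulus (illustrative choice)


def share(value: int, n: int) -> list[int]:
    """Additively share `value` among n parties modulo P."""
    parts = [secrets.randbelow(P) for _ in range(n - 1)]
    parts.append((value - sum(parts)) % P)
    return parts


def open_shares(shares: list[int]) -> int:
    """Reveal a shared value; in a real protocol this costs one communication round."""
    return sum(shares) % P


def offline_triple(n: int) -> tuple[list[int], list[int], list[int]]:
    """Offline phase: shares of random a, b and of c = a*b.

    Assumption: a trusted dealer is used here purely as a stand-in.
    """
    a, b = secrets.randbelow(P), secrets.randbelow(P)
    return share(a, n), share(b, n), share(a * b % P, n)


def online_multiply(x_sh, y_sh, triple):
    """Online phase: consume one triple to get shares of x*y."""
    a_sh, b_sh, c_sh = triple
    # Only the masked values d = x - a and e = y - b are opened; they leak
    # nothing about x and y because a and b are uniformly random.
    d = open_shares([(x - a) % P for x, a in zip(x_sh, a_sh)])
    e = open_shares([(y - b) % P for y, b in zip(y_sh, b_sh)])
    # x*y = c + d*b + e*a + d*e, computed share-wise.
    z_sh = [(c + d * b + e * a) % P for a, b, c in zip(a_sh, b_sh, c_sh)]
    z_sh[0] = (z_sh[0] + d * e) % P  # the public term d*e is added by one party only
    return z_sh


if __name__ == "__main__":
    n = 3
    triple = offline_triple(n)  # prepared before the inputs are known
    z = online_multiply(share(6, n), share(7, n), triple)
    print(open_shares(z))
```

The online phase needs only two openings and some local arithmetic per multiplication, while the input-independent work of producing the triple can be done ahead of time. Each triple must be used only once.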

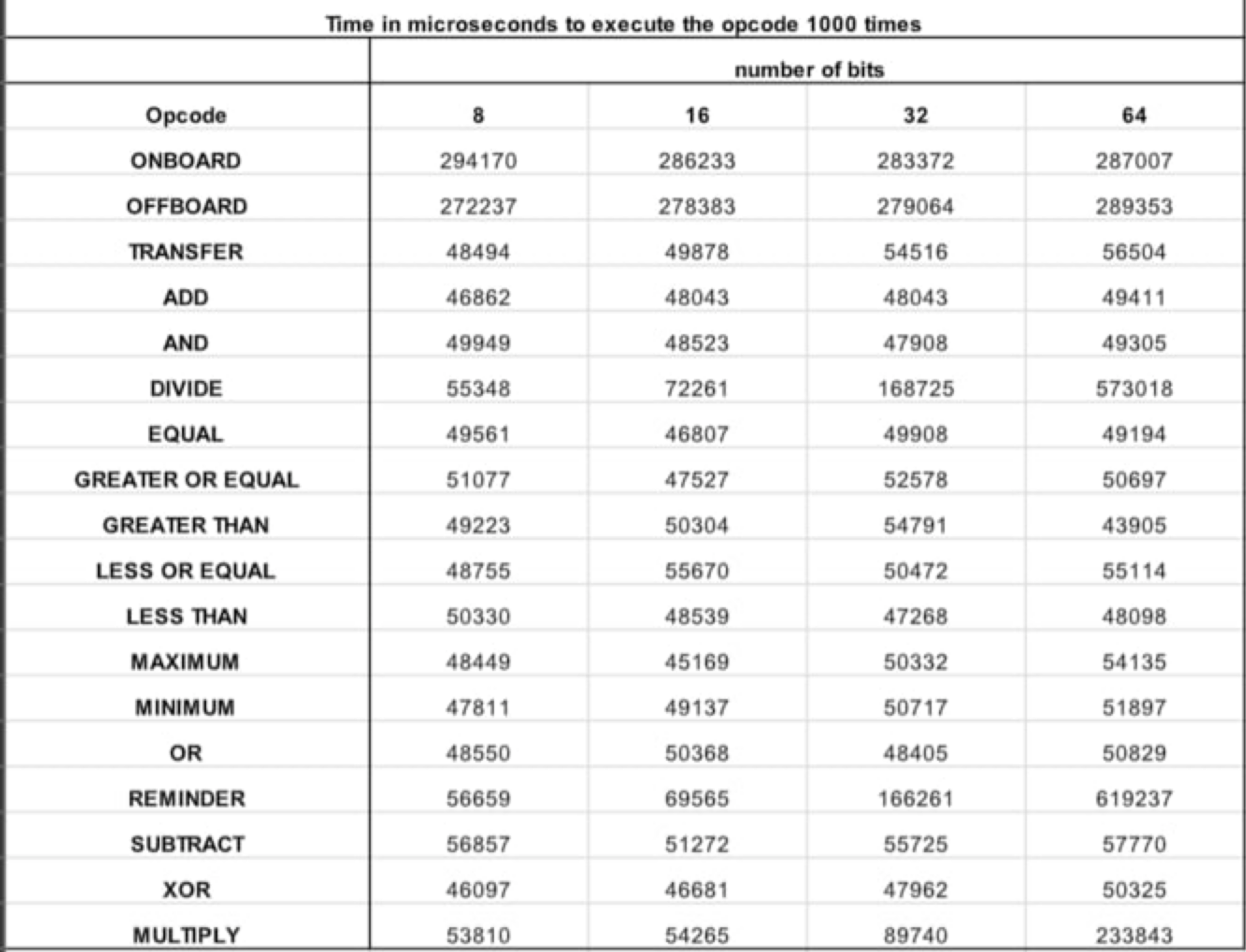

The table below by SodaLabs shows initial benchmarks on how long it takes to execute different opcodes 1,000 times in their gcEVM (noted in microseconds). While this is a step in the right direction, a lot of work remains to improve efficiency and expand the operator set beyond a few nodes.

Source: SodaLabs

The benefit of the ZK-based approach is that you only use MPC for use cases that require computation over a shared private state. FHE competes more directly with MPC and heavily relies on hardware acceleration.

2. Trusted Execution Environments

There’s recently been a revived interest in TEEs, which can be leveraged either in isolation (TEE-based private blockchains or co-processors) or in combination with other PETs such as ZK-based solutions (only use TEE for computation over shared private state).

While TEEs are in some ways more mature and introduce less performance overhead, they don’t come without downsides. Firstly, TEEs have different trust assumptions (1/N) and offer a hardware-based solution rather than software. An often-heard criticism is around past vulnerabilities of SGX, but it’s worth noting that TEE ≠ Intel SGX. TEEs also require trusting the hardware provider and the hardware is expensive (not accessible to most). One solution to the risk of physical attacks could be to run TEEs in space for mission-critical things.

Overall, TEEs seem more suitable for attestation or use cases that only need short-term privacy (threshold decryption, dark order books, etc). For permanent or long-term privacy, the security guarantees seem less attractive.

3. Private DAC and other approaches relying on trusted 3rd parties for privacy

Intermediated privacy can offer privacy from other users, but the privacy guarantees come solely from trusting a third party (single point of failure). While it resembles “web2 privacy” (privacy from other users), it can be strengthened with additional guarantees (cryptographic or economic) and allow verification of correct execution.

Private data availability committees (DAC) are one example of this: members of the DAC store data off-chain, and users trust them to correctly store data and enforce state transition updates. Another flavor of this is the Franchised Sequencer proposed by Tom Walpo.

While this approach makes large tradeoffs in the privacy guarantees, it might be the only feasible alternative for lower-value, high-performance applications in terms of cost and performance (for now at least). An example is Lens Protocol, which plans to use a private DAC to achieve private feeds. For use cases such as on-chain social, the tradeoff between privacy and cost/performance is probably reasonable for now (given the cost and overhead of alternatives).

4. Stealth Addresses

Stealth addresses can provide similar privacy guarantees as creating a new address for each transaction, but the process is automated on the backend and abstracted away from users. For more information, see this overview by Vitalik or this deep dive into different approaches. The main players in this field include Umbra and Fluidkey.
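The core idea behind most stealth address schemes is a Diffie-Hellman exchange between a sender's one-time key and the recipient's published keys. The sketch below shows that derivation in a toy multiplicative group so that it runs with the standard library alone. It is not secure and is not the exact construction used by Umbra or Fluidkey: real schemes use elliptic curves, and the group parameters, the split into viewing and spending keys as modeled here, and all function names are assumptions for illustration.

```python
import hashlib
import secrets

P = 2**127 - 1   # toy prime modulus; real schemes use an elliptic curve instead
G = 3            # toy base element
ORDER = P - 1    # exponents are reduced modulo P - 1 (Fermat's little theorem)


def keypair() -> tuple[int, int]:
    """Return (private key, public key) in the toy group."""
    sk = secrets.randbelow(ORDER - 1) + 1
    return sk, pow(G, sk, P)


def tweak(shared_secret: int) -> int:
    """Hash the shared secret into an exponent."""
    digest = hashlib.sha256(shared_secret.to_bytes(16, "big")).digest()
    return int.from_bytes(digest, "big") % ORDER


def sender_derive(view_pub: int, spend_pub: int) -> tuple[int, int]:
    """Sender: create a fresh one-time address for the recipient."""
    eph_sk, eph_pub = keypair()              # new ephemeral key per payment
    shared = pow(view_pub, eph_sk, P)        # Diffie-Hellman shared secret
    stealth_pub = spend_pub * pow(G, tweak(shared), P) % P
    return eph_pub, stealth_pub              # eph_pub is published with the payment


def recipient_derive(eph_pub: int, view_sk: int, spend_sk: int) -> int:
    """Recipient: recover the private key that controls the one-time address."""
    shared = pow(eph_pub, view_sk, P)        # same shared secret as the sender
    return (spend_sk + tweak(shared)) % ORDER


if __name__ == "__main__":
    view_sk, view_pub = keypair()
    spend_sk, spend_pub = keypair()
    eph_pub, stealth_pub = sender_derive(view_pub, spend_pub)
    stealth_sk = recipient_derive(eph_pub, view_sk, spend_sk)
    print(pow(G, stealth_sk, P) == stealth_pub)
```

Each payment uses a fresh ephemeral key, so each one lands on a different address that only the recipient can link back to their published keys. This is the part that gets automated and hidden from users.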

While stealth addresses offer a relatively simple solution, the main drawback is that they can only add privacy guarantees for transactions (payments and transfers), not general-purpose computation. This sets them apart from the other three solutions mentioned above.

In addition, the privacy guarantees stealth addresses provide are not as strong as alternatives. The anonymity can be broken with simple clustering analysis, particularly if the incoming and outgoing transfers are not in a similar range (e.g. receive $10,000, but spend on average $10-100 on everyday transactions). Another challenge with stealth addresses is upgrading keys, which today needs to be done individually for each wallet (key store rollups could help with this problem). From the UX side, stealth address protocols also require account abstraction or a paymaster to cover fees if the account doesn’t have the fee token (e.g. ETH).

Risks To Our Thesis

Given the fast pace of development and general uncertainty around different technical solutions, there are several risks to our thesis of MPC being the end game. The main reasons why we may not end up needing MPC in one shape or form include:

Shared private state isn’t as important as we make it out to be: In that case, ZK-based infrastructure is better poised to win as it has stronger privacy guarantees and lower overhead than FHE. There are already use cases where ZK-based systems work well for isolated use cases, such as the private payments protocol Payy.

The tradeoff in performance is not worth the benefit in privacy guarantees: One could argue that the trust assumptions of an MPC network with 2-3 permissioned parties are not meaningfully different from a single centralized player and that the significant increase in cost/overhead is not worth it. This may be true for many applications, particularly low-value, cost-sensitive ones (e.g. social or gaming). However, there are also many high-value use cases where collaboration is currently very expensive (or impossible) due to legal issues or large coordination friction. The latter is where MPC and FHE-based solutions can shine.

Specialization wins out over general-purpose design: Building a new chain and bootstrapping a community of users and developers is hard. Due to this, general-purpose privacy infrastructure (L1/L2s) may struggle to get traction. Another question concerns specialization; it’s very difficult for a single protocol design to cover the full trade-off space. In this world, solutions that provide privacy for existing ecosystems (confidentiality as a service) or specialized use cases (e.g. for payments) would prevail. We are skeptical about the latter though due to the complexity it introduces to application developers that would need to implement some cryptography themselves (rather than it being abstracted away).

Regulation continues to hinder experimentation around privacy solutions: This is a risk for anyone building both privacy infrastructure and applications with some privacy guarantees. Non-financial use cases face less regulatory risk, but it’s hard (impossible) to control what gets built on top of permissionless privacy infrastructure. We may well solve the technical problems before the regulatory ones.

The overhead of MPC and FHE-based schemes remains too high for most use cases: While MPC suffers mostly from communication overhead, FHE teams are heavily relying on hardware acceleration to improve their performance. However, if we can extrapolate the evolution of specialized hardware on the ZK side, it will take much longer than most would want before we get production-ready FHE hardware. Examples of teams working on FHE hardware acceleration include Optalysys, fhela, and Niobium.

Summary

Ultimately, a chain is only as strong as its weakest link. In the case of programmable privacy infrastructure, the trust guarantees boil down to those of MPC if we want it to be able to handle shared private state without a single point of failure.

While this piece may sound critical towards MPC, it is not. MPC offers a huge improvement to the current status quo of relying on centralized third parties. The main problem, in our view, is the false sense of confidence across the industry and issues being swept under the rug. Instead, we should deal with issues head-on and focus on evaluating potential risks.

Not all problems, however, need to be solved using the same tools. Even though we believe that MPC is the end game, alternative approaches are viable options as long as the overhead for MPC-powered solutions remains high. It's always worth considering which approach best fits the specific needs/characteristics of the problems we are trying to solve and what tradeoffs we are willing to make.

Even if you have the best hammer in the world, not everything is a nail.

Appendix 1: Different Approaches To Privacy In Blockchains

Privacy enhancing technologies, or PETs, aim to improve one or more aspects of the above. More concretely, they are technical solutions to protect data during storage, computing, and communication.

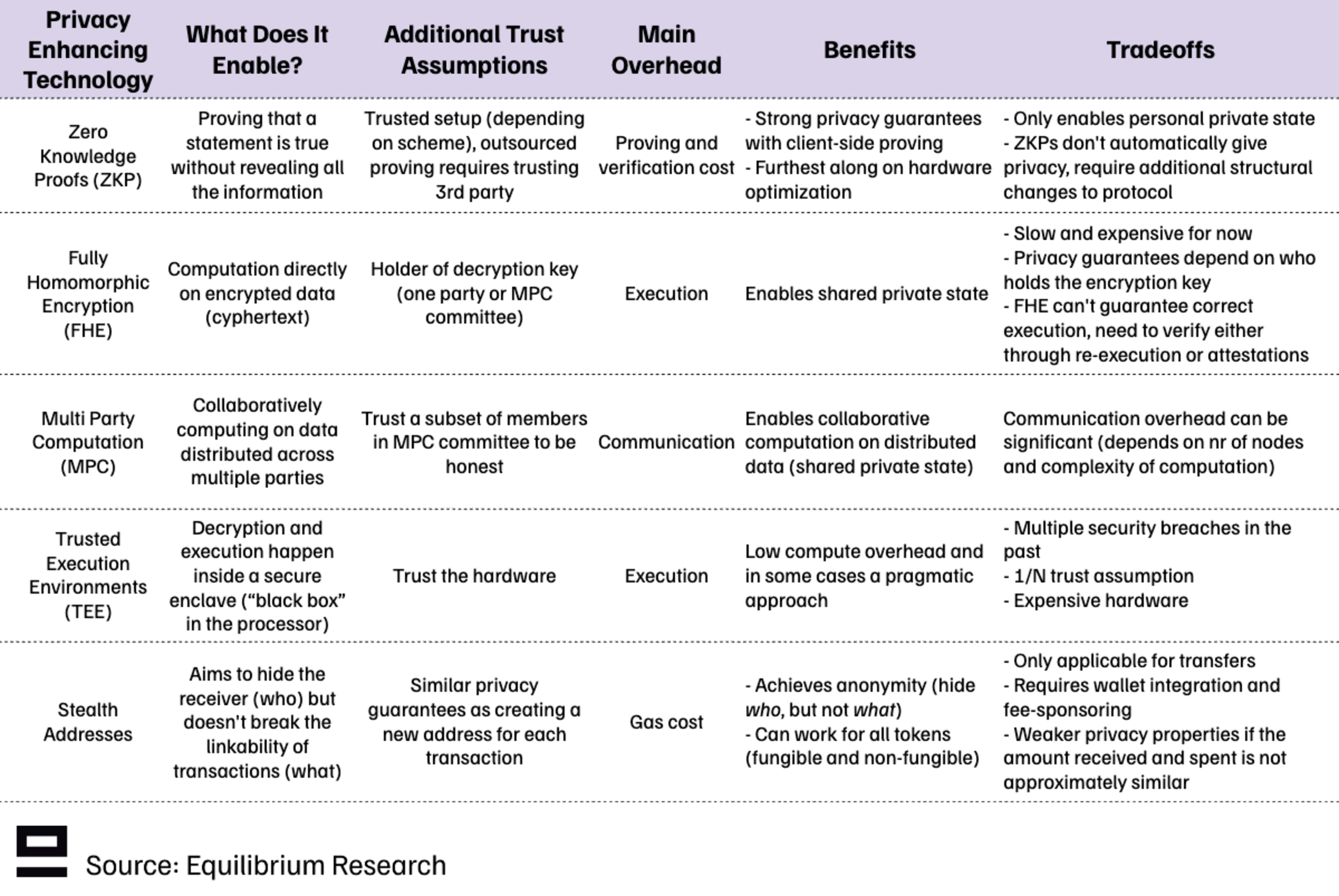

There are plenty of different PETs to choose from, but the most relevant ones for the blockchain industry include the three-letter soup - ZKP, MPC, FHE, and TEE - along with additional methods such as stealth addresses:

These PETs can be combined in various ways to achieve different tradeoffs and trust assumptions. We also have solutions that rely on a trusted third party (intermediated privacy), such as private data availability committees (DAC). These can enable privacy from other users, but the privacy guarantees come solely from trusting a third party. In this sense, it resembles “web2 privacy” (privacy from other users), but it can be strengthened with additional guarantees (cryptographic or economic).

In total, we’ve identified 11 different approaches to achieving some privacy guarantees in blockchain networks. Some of the tradeoffs observed include:

Trusted vs Trust-minimized privacy (Is there a single point of failure?)

Hardware vs Software approach

Isolated instances vs combination of multiple PETs

L1 vs L2

New chain vs Add-on privacy to existing chains (“confidentiality as a service”)

Size of shielded set (Multi-chain > Single-chain > Application > Single asset)

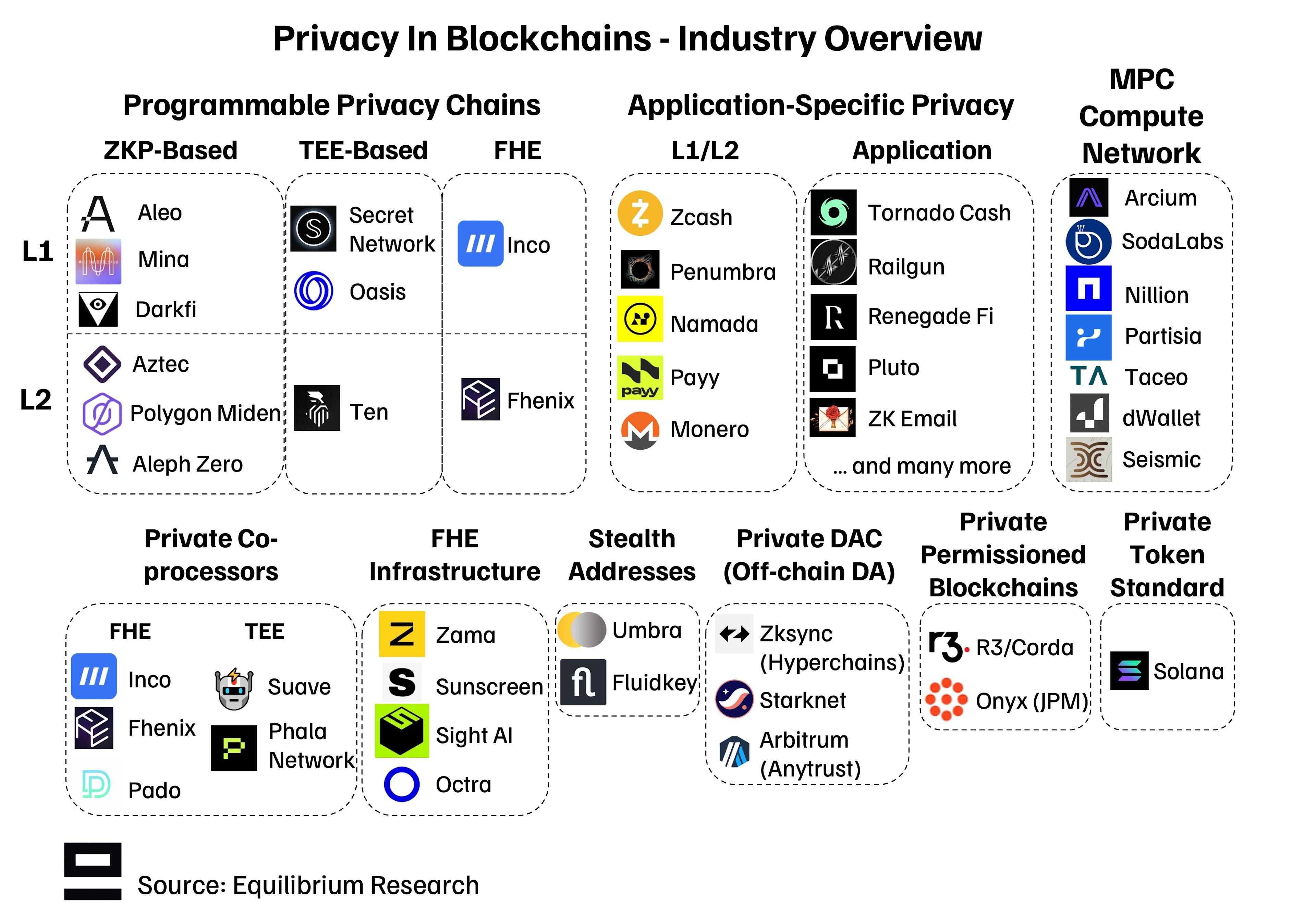

Appendix 2: Industry Overview

Within these 11 categories, many different companies are working on one or more solutions. Below is a (non-exhaustive) overview of the current state of the industry:
