Thanks to Dino (Fluent/Modular Media), Lisa (Aztec), and the team at Celestia for review and feedback! In addition, there were many early discussions with Joakim, Olli & Vesa-Ville (Equilibrium Labs) and Mika & Chris (Equilibrium Ventures) that helped shape this piece.

To talk about anything in the piece, you can reach out to Hannes on X or by email.

What Are Blockchains and How Do They Extend The Current Computing Paradigm?

Blockchain (noun): A coordination machine that enables participants from around the world to collaborate along a set of commonly agreed rules without any third party facilitating it.

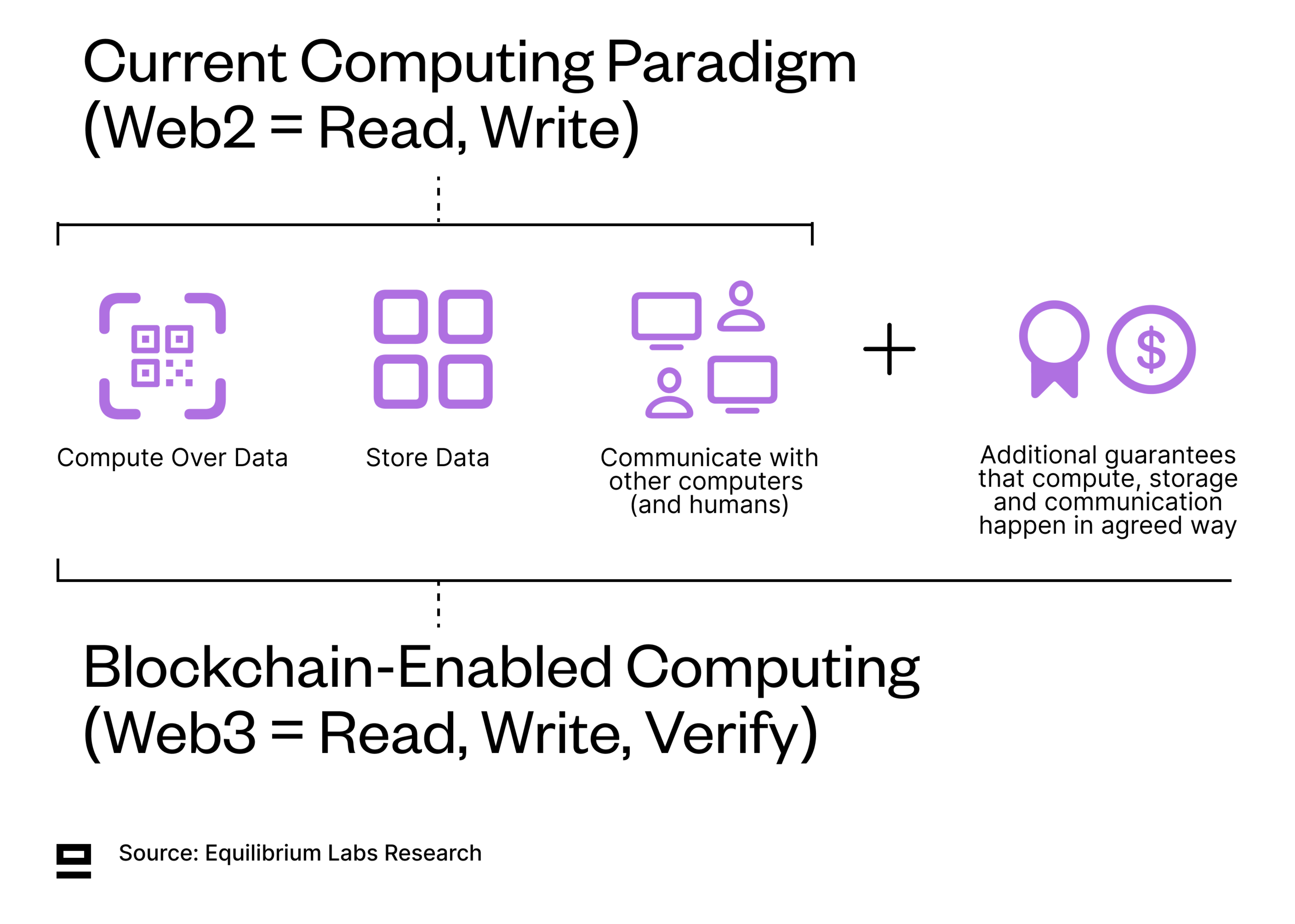

Computers are designed to do three things: store data, compute, and communicate with each other and humans. Blockchains add a fourth dimension: additional guarantees that these three things (storage, computation, and communication) happen in an agreed way. These guarantees enable strangers to cooperate without a trusted third party facilitating it, i.e. in a decentralized way.

These additional guarantees can be either economic (trust game theory and incentives/disincentives) or cryptographic (trust math), but most applications use a combination of the two: cryptoeconomic guarantees. This stands in stark contrast to the current status quo of largely reputation-based systems.

While Web3 is often described as “read, write, own”, we believe a better notion for the third iteration of the internet is “read, write, verify” given that the key benefit of public blockchains is guaranteed computation and easy verification that these guarantees were honored. Ownership can be a subset of guaranteed computation if we build digital artifacts that can be bought, sold, and controlled. However, many use cases of blockchains benefit from guaranteed computation but don’t directly involve ownership. For example, if your health in a fully on-chain game is 77/100 - do you own that health or is it just enforceable on-chain according to commonly agreed-upon rules? We would argue the latter, but Chris Dixon might disagree.

Web3 = Read, Write, Verify

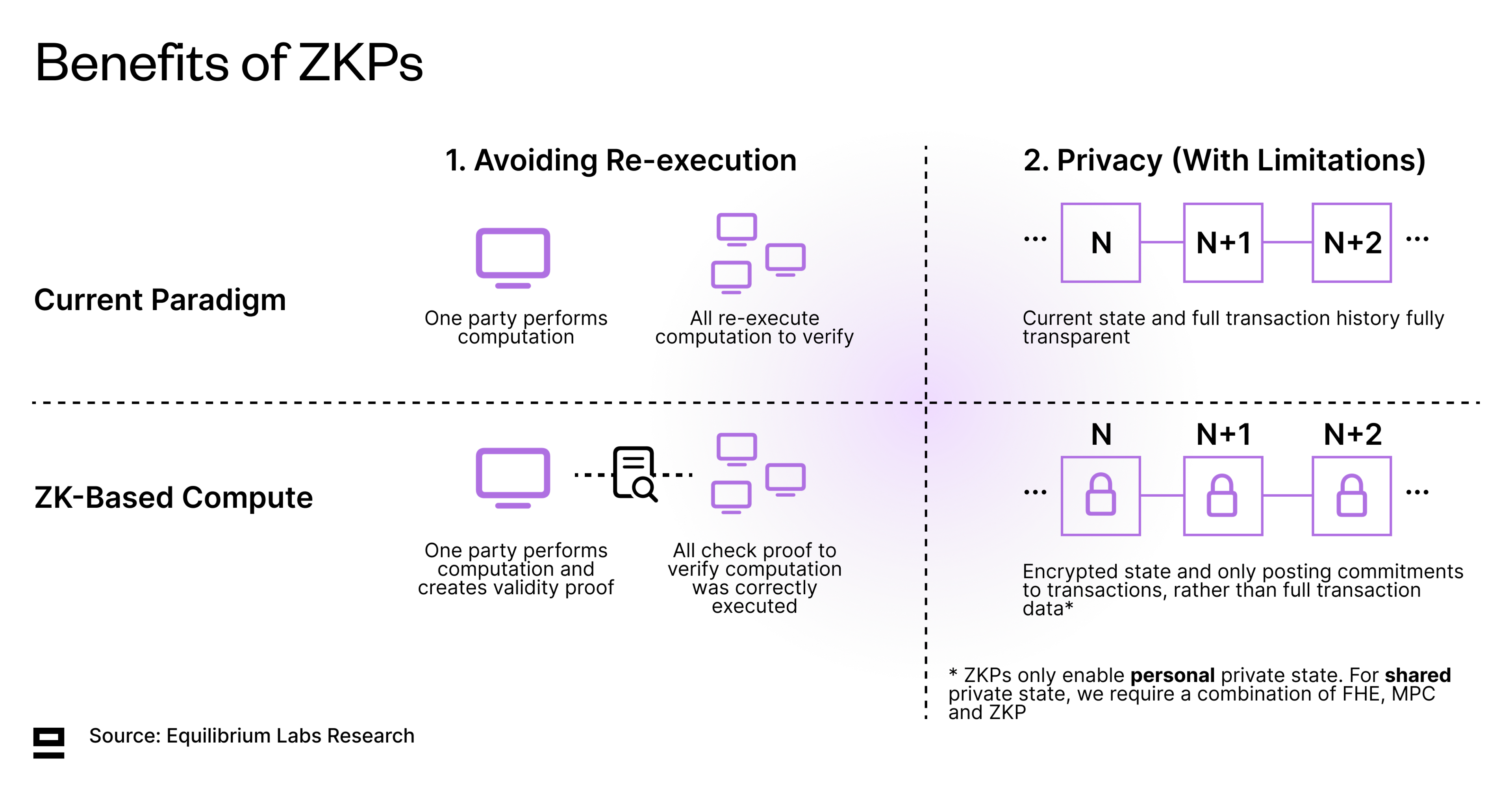

ZK and Modularity - Two Trends That Will Accelerate

Blockchains offer a lot to be excited about, but the decentralized model also adds overhead and inefficiency through additional functions such as P2P messaging and consensus. In addition, most blockchains still validate correct state transitions by re-execution, meaning that each node on the network has to re-execute transactions to verify the correctness of the proposed state transition. This is wasteful and in stark contrast to the centralized model where only one entity executes. While a decentralized system always contains some overhead and replication, the aim should be to get asymptotically closer to a centralized benchmark in terms of efficiency.

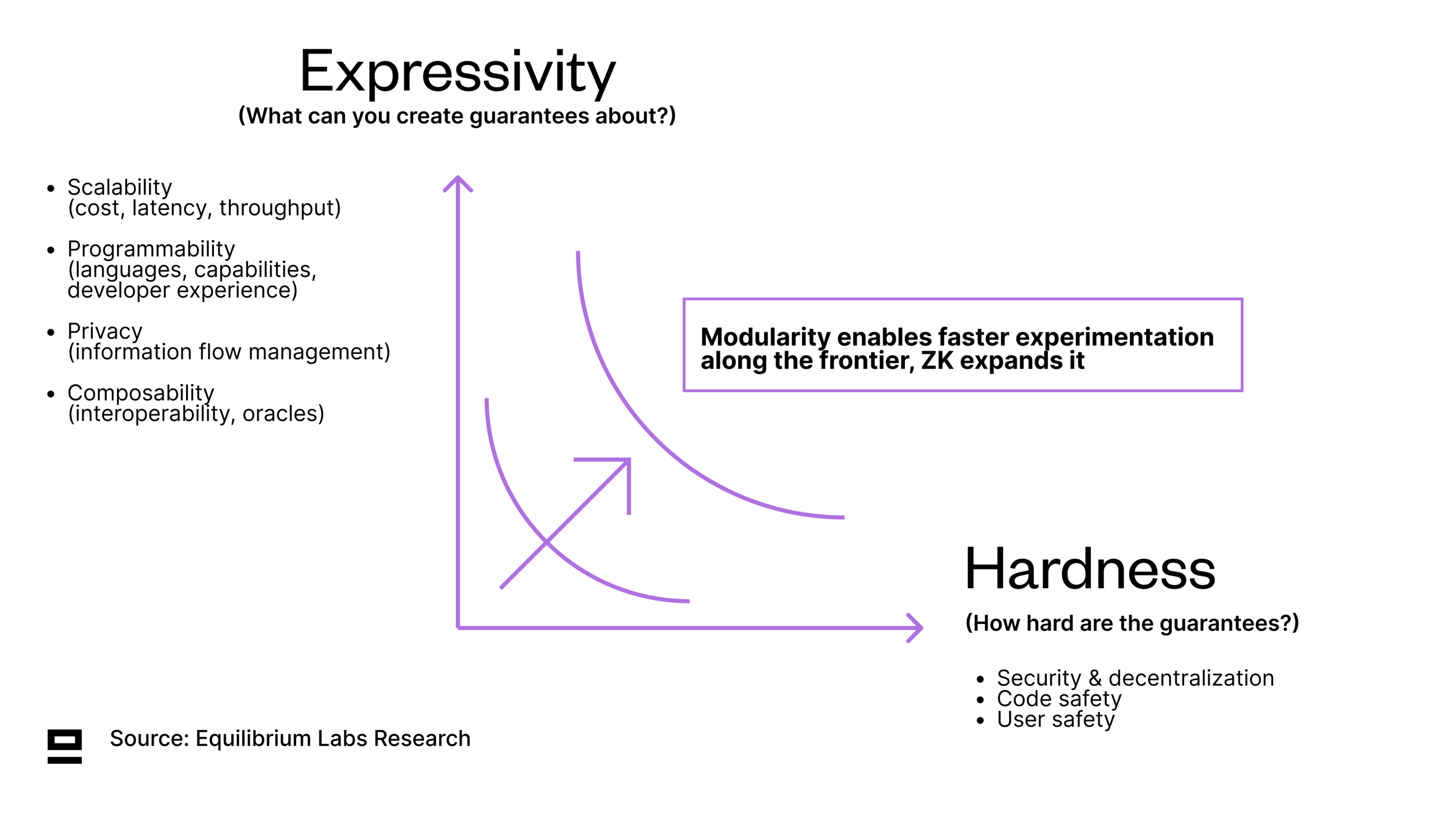

Even though the underlying infrastructure has improved significantly over the last decade, there is a lot of work remaining before blockchains can handle internet-level scale. We see tradeoffs along two main axes - expressivity and hardness - and believe modularity enables faster experimentation along the tradeoff frontier while ZK expands it:

Expressivity - What can you create guarantees about? Contains scalability (cost, latency, throughput, etc), privacy (or information flow management), programmability, and composability.

Hardness - How hard are these guarantees? Contains security, decentralization, and user & code safety.

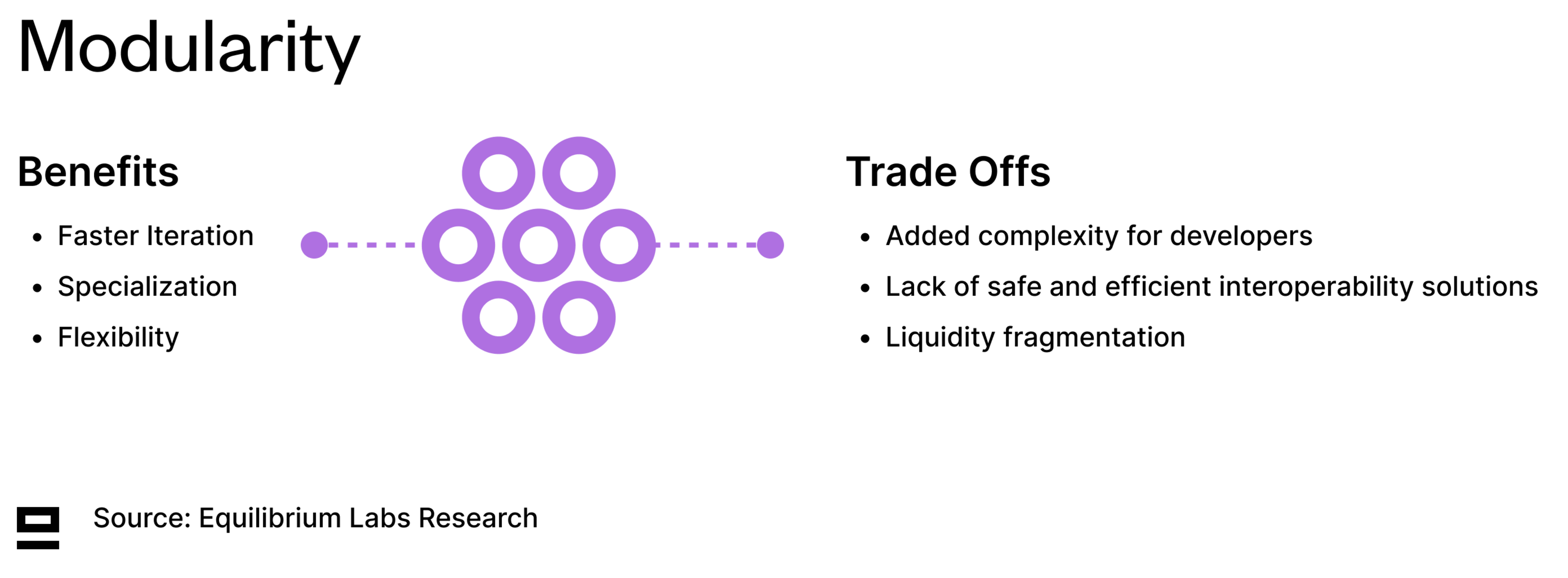

Modularity is the degree to which a system’s components can be separated and recombined. Through faster feedback loops and lower barriers to entry (less economic and human capital required), modularity enables faster experimentation and specialization. The question of modular vs integrated is not binary, but rather a spectrum to experiment along to figure out which parts make sense to decouple, and which don’t.

Zero Knowledge Proofs, or ZKPs, on the other hand, enable one party (the prover) to prove to another party (the verifier) that a statement is true, without revealing any additional information beyond its validity. This can increase scalability and efficiency by avoiding re-execution (moving from a model where everyone executes to verify, to one where one party executes and everyone verifies), as well as expressivity by enabling privacy (with limitations). ZKPs also improve the hardness of guarantees by replacing weaker cryptoeconomic guarantees with stronger cryptographic ones, which is represented by pushing the tradeoff frontier outwards (referencing the chart above).
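To make the prover and verifier roles concrete, below is a minimal sketch of a textbook Schnorr proof of knowledge, made non-interactive with the Fiat-Shamir heuristic. It is not the proof system used by any project in this piece, and the group parameters are deliberately tiny and insecure. It shows the zero-knowledge part (the verifier learns that the prover knows `x`, but not `x` itself); it does not show succinctness, which is the property that lets verification replace re-execution.

```python
import hashlib
import secrets

# Toy group: P = 2*Q + 1 is a safe prime and G = 4 generates the subgroup of prime order Q.
# These sizes are hopelessly insecure; they only keep the arithmetic readable.
P, Q, G = 2039, 1019, 4


def fiat_shamir_challenge(*values: int) -> int:
    """Derive the verifier's challenge by hashing the public transcript."""
    data = ",".join(str(v) for v in values).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove_knowledge(secret_x: int) -> tuple[int, int, int]:
    """Prover: show knowledge of x with y = G^x mod P, without revealing x."""
    y = pow(G, secret_x, P)             # public statement
    r = secrets.randbelow(Q)            # fresh random nonce
    t = pow(G, r, P)                    # commitment to the nonce
    c = fiat_shamir_challenge(G, y, t)
    s = (r + c * secret_x) % Q          # response; the nonce r masks x
    return y, t, s


def verify_knowledge(y: int, t: int, s: int) -> bool:
    """Verifier: accept iff G^s == t * y^c mod P. Only y, t, s are seen, never x."""
    c = fiat_shamir_challenge(G, y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P


y, t, s = prove_knowledge(secret_x=123)
print(verify_knowledge(y, t, s))            # True
print(verify_knowledge(y, t, (s + 1) % Q))  # False: a tampered response fails
```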

We believe both modularity and "ZKfication of everything" are trends that will continue to accelerate. While both provide interesting lenses through which to explore the space individually - we are particularly interested in the cross-over of the two. The two key questions that we’re interested in are:

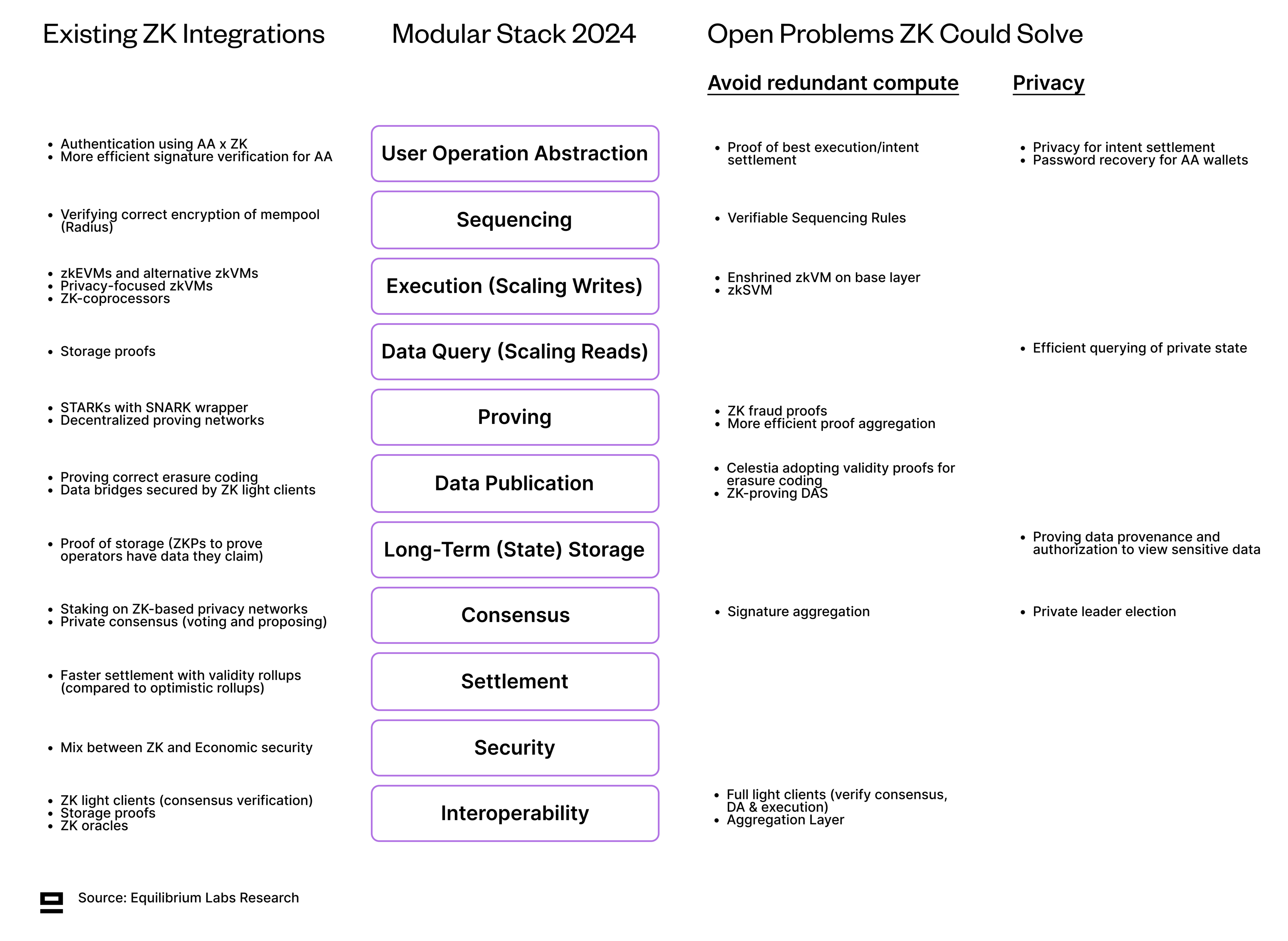

Which parts of the modular stack have already incorporated ZKPs and which are yet to be explored?

What problems could be alleviated with ZKPs?

However, before we can get into those questions, we need an updated view of what the modular stack looks like in 2024.

Modular Stack in 2024

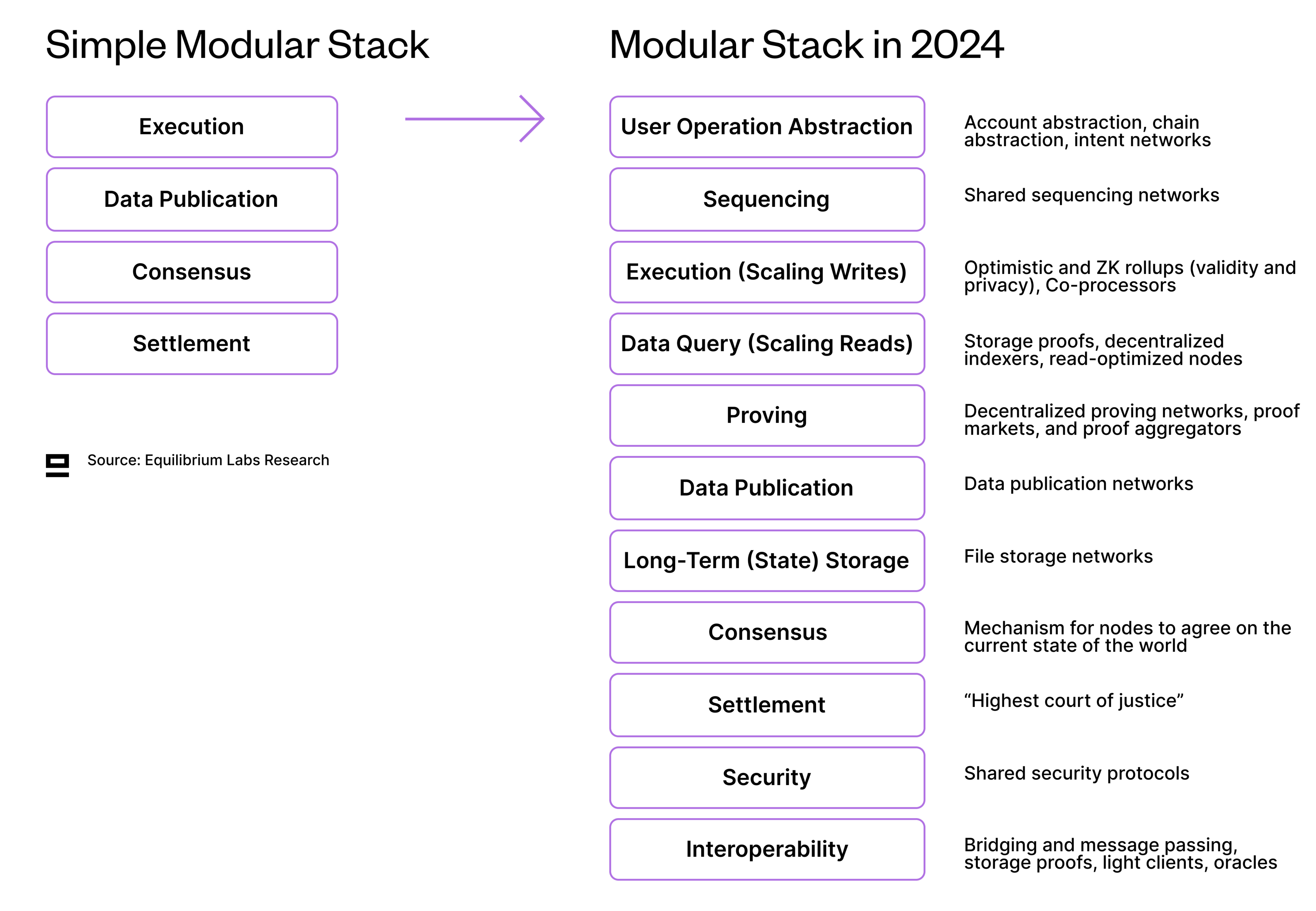

The often-used image of the modular stack with four components (execution, data publication, consensus, settlement) is useful as a simple mental model, but we don’t feel it’s an adequate representation anymore given how much the modular space has evolved. Further unbundling leads to new components that were previously thought of as part of a bigger piece, while also creating new dependencies and a need for safe interoperability between the different components (more about this later on). Given the pace at which the space is advancing, it can be hard to stay up-to-date on all the innovations at different levels of the stack.

Earlier attempts at mapping the web3 stack include Kyle Samani's (Multicoin), originally published in 2018 and updated in 2019. It covers everything from decentralized last-mile internet access (such as Helium) to end-user key management. While the principles behind it could be reused, some pieces, like proving and verification, are completely missing.

With these in mind, we’ve attempted to create an updated representation of what the modular stack looks like in 2024, expanding on the existing four-part modular stack. It’s split by component rather than functionality, which means that P2P networking, for example, is included in consensus rather than splitting it out as a separate component - mainly because it’s difficult to build a protocol around it.

ZK In The Modular Stack

Now that we have an updated view of the modular stack, we can turn to the real question: which parts of the stack ZK has already penetrated, and which open problems could be solved by introducing ZK (either by avoiding re-execution or by adding privacy features). Below is a summary of our findings, before going deeper into each component separately.

1 - User Operation Abstraction

Current users of blockchains need to navigate multiple chains, wallets, and interfaces, which is cumbersome and acts as friction for wider adoption. User operation abstraction is an umbrella term for any attempt to abstract away this complexity and allow users to interact with only one interface (for example a specific application or wallet), with all the complexity happening on the backend. Some examples of infra-level abstractions include:

Account abstraction (AA) enables smart contracts to make transactions without requiring user signatures for each operation (“programmable crypto account”). It can be used to define who can sign (key management), what to sign (tx payload), how to sign (signature algorithm), and when to sign (condition of transaction approval). Combined, these features enable things like using social login to interact with dApps, 2FA, account recovery, and automation (signing tx automatically). While the discussion often centers around Ethereum (ERC-4337 passed in the spring of 2023), many other chains already have built-in, native account abstraction (Aptos, Sui, Near, ICP, Starknet, and zkSync).
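As an illustration of "programmable crypto accounts", here is a minimal sketch of the kind of validation policy an AA wallet can encode: who may sign, what may be called, how much may move, and when. All class, field, and key names are hypothetical, signature checking (the "how to sign") is assumed to have happened already, and this is not the ERC-4337 interface; in ERC-4337 such checks live in the account contract's own validation function (`validateUserOp`).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserOp:
    signer: str      # key that authorized the operation (signature assumed already checked)
    target: str      # contract being called
    value: int       # amount transferred, in the smallest unit
    timestamp: int   # submission time, unix seconds


@dataclass
class AccountPolicy:
    owners: set[str]            # keys allowed to authorize anything
    session_keys: set[str]      # restricted keys, e.g. for automated signing
    allowed_targets: set[str]   # contracts that session keys may call
    session_spend_limit: int    # per-operation cap for session keys
    valid_after: int = 0        # session keys only work inside this time window
    valid_until: int = 2**63 - 1

    def validate(self, op: UserOp) -> bool:
        """Return True if the operation satisfies this account's own rules."""
        if op.signer in self.owners:                  # who: owners are unrestricted
            return True
        if op.signer not in self.session_keys:
            return False
        return (
            op.target in self.allowed_targets                          # what may be called
            and op.value <= self.session_spend_limit                   # how much may move
            and self.valid_after <= op.timestamp <= self.valid_until   # when it is allowed
        )


policy = AccountPolicy(
    owners={"owner-key"},
    session_keys={"game-bot"},
    allowed_targets={"game-contract"},
    session_spend_limit=10,
)
print(policy.validate(UserOp("game-bot", "game-contract", 5, 1_700_000_000)))  # True
print(policy.validate(UserOp("game-bot", "dex-contract", 5, 1_700_000_000)))   # False
```

The design choice to illustrate is that the rules live in the account rather than the protocol, so a session key for automation can be scoped far more tightly than the owner's key.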

Chain abstraction allows users to sign transactions on different chains while only interacting with one account (one interface, multiple chains). Several teams are working on this, including Near, ICP, and dWallet. These solutions leverage MPC and chain signatures, where the private key for the other network is split into several small pieces (key shares) held by validators on the source chain, who jointly sign the cross-chain transactions. When users want to interact with another chain, a sufficient number of validators need to sign the transaction to meet the signing threshold. This retains security, as the full private key is never assembled in any one place. It does, however, face the risk of validator collusion, which is why the cryptoeconomic security and validator decentralization of the underlying chain remain highly relevant.
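The threshold idea can be sketched with Shamir secret sharing: any `threshold` validators can jointly produce a value that depends on the full key, while each one only ever touches its own share. In the minimal sketch below, the joint computation is the public key `G^secret`, assembled "in the exponent" with Lagrange coefficients; production chain-signature networks apply the same principle to producing a signature, and generate the key with distributed key generation so that no dealer ever knows it. The dealer-based `split_key`, the toy group, and all function names are assumptions for illustration only.

```python
import secrets

# Toy group (insecure sizes): P = 2*Q + 1 is a safe prime, G = 4 has prime order Q.
P, Q, G = 2039, 1019, 4


def split_key(secret: int, threshold: int, n: int) -> dict[int, int]:
    """Shamir-share `secret` so that any `threshold` of the n shares determine it."""
    coeffs = [secret] + [secrets.randbelow(Q) for _ in range(threshold - 1)]

    def poly(x: int) -> int:
        return sum(c * pow(x, k, Q) for k, c in enumerate(coeffs)) % Q

    return {i: poly(i) for i in range(1, n + 1)}


def lagrange_at_zero(i: int, ids: list[int]) -> int:
    """Coefficient that weights share i when interpolating the polynomial at x = 0."""
    num, den = 1, 1
    for j in ids:
        if j != i:
            num = num * (-j) % Q
            den = den * (i - j) % Q
    return num * pow(den, -1, Q) % Q


def combine_in_exponent(partials: dict[int, int]) -> int:
    """Combine per-validator values G^share_i into G^secret without rebuilding the secret."""
    ids = list(partials)
    result = 1
    for i, g_share in partials.items():
        result = result * pow(g_share, lagrange_at_zero(i, ids), P) % P
    return result


shares = split_key(secret=777, threshold=3, n=5)
# Each of three validators computes a value from its own share only.
partials = {i: pow(G, shares[i], P) for i in (1, 3, 5)}
print(combine_in_exponent(partials) == pow(G, 777, P))  # True
```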

Intents, on a high level, enable bridging user wants and needs into operations that can be executed by a blockchain. This requires intent solvers - specialized off-chain agents tasked with finding the best possible solution to the user’s intent. There are already several apps that use specialized intents, such as DEX-aggregators (“best price”) and bridge aggregators (“cheapest/fastest bridging”). General intent-settlement networks (Anoma, Essential, Suave) aim to make it easier for users to express more complicated intents and for developers to build intent-centric applications. There are still many open questions, however, including how to formalize the process, what an intent-centric language would look like, whether an optimal solution always exists, and whether it can be found.

Existing ZK Integrations

Authentication using AA x ZK: One example of this is Sui’s zkLogin, which enables users to log in using familiar credentials such as an email address. It uses ZKPs to prevent third parties from linking a Sui address with its corresponding OAuth identifier.

More efficient signature verification for AA wallets: Verifying transactions in AA contracts can be significantly more expensive than those initiated by a traditional account (EOA). Orbiter tries to tackle this with a bundler service that leverages ZKPs for verifying the correctness of transaction signatures and maintains the nonce value and gas balance of the AA account (through a Merkle world state tree). With the help of proof aggregation and sharing the on-chain verification cost equally amongst all users, this can lead to significant cost savings.

Open Problems That ZKPs Could Solve

Proof of best execution or intent fulfillment: While intents and AA can abstract away complexity from users, they can also act as a centralizing force and require us to rely on specialized actors (solvers) to find optimal execution paths. Rather than simply trusting the goodwill of the solver, ZKPs could potentially be used to prove that the optimal path for the user was chosen out of the ones sampled by the solver.
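The statement such a proof would cover can be written down as a plain check. The minimal sketch below (hypothetical names and venues) separates the solver's choice from the predicate a ZKP could attest to: the chosen route is one of the sampled routes and none of them pays the user more. As the paragraph above notes, this only covers the routes the solver actually sampled; it says nothing about routes it never looked at.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    venue: str
    amount_out: int  # tokens the user would receive via this route


def choose_route(sampled: list[Route]) -> Route:
    """Solver side: pick the route with the best output among those it sampled."""
    return max(sampled, key=lambda route: route.amount_out)


def is_best_among_sampled(chosen: Route, sampled: list[Route]) -> bool:
    """The statement a ZKP could attest to: `chosen` is one of the sampled routes
    and no sampled route pays the user more. Unsampled routes are not covered."""
    return chosen in sampled and all(chosen.amount_out >= r.amount_out for r in sampled)


sampled = [Route("dex-a", 990), Route("dex-b", 1004), Route("aggregator-c", 1001)]
chosen = choose_route(sampled)
print(chosen.venue, is_best_among_sampled(chosen, sampled))  # dex-b True
print(is_best_among_sampled(Route("dex-a", 990), sampled))   # False
```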

Privacy for intent settlement: Protocols like Taiga aim to enable fully shielded intent settlement to preserve the privacy of users - part of a broader move towards adding privacy (or at least confidentiality) to blockchain networks. It uses ZKPs (Halo2) to hide sensitive information about the state transitions (application types, parties involved, etc).

Password recovery for AA wallets: The idea behind this proposal is to enable users to recover their wallets if they lose their private keys. By storing a hash(password, nonce) on the contract wallet, users can generate a ZKP with the help of their password to verify that it’s their account and request a change of the private key. A confirmation period (3 days or more) serves as a safeguard against unauthorized access attempts.
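The commitment-and-delay flow of this proposal can be sketched as a small state machine. Everything here is a hypothetical illustration: the class and method names are invented, the ability of the current key holder to cancel a pending recovery is our assumption about how the confirmation period protects the owner, and, crucially, the ZKP is replaced by a direct hash check. In the actual proposal the user proves knowledge of the password without revealing it; revealing it as this sketch does would let anyone who sees the transaction reuse it.

```python
import hashlib
import secrets

CONFIRMATION_PERIOD = 3 * 24 * 60 * 60  # 3 days, in seconds


def password_commitment(password: str, nonce: bytes) -> bytes:
    """What the contract wallet stores: hash(password, nonce), never the password."""
    return hashlib.sha256(nonce + password.encode()).digest()


class RecoverableWallet:
    def __init__(self, owner_key: str, password: str):
        self.owner_key = owner_key
        self.nonce = secrets.token_bytes(16)
        self.stored_hash = password_commitment(password, self.nonce)
        self.pending = None  # (new_key, unlock_time) while a recovery is in progress

    def request_recovery(self, new_key: str, password: str, now: int) -> bool:
        # STAND-IN: a real design verifies a ZKP of knowledge of the password here.
        if password_commitment(password, self.nonce) != self.stored_hash:
            return False
        self.pending = (new_key, now + CONFIRMATION_PERIOD)
        return True

    def cancel_recovery(self, caller_key: str) -> None:
        # Assumption: during the confirmation period the current key holder can veto.
        if caller_key == self.owner_key:
            self.pending = None

    def finalize_recovery(self, now: int) -> bool:
        if self.pending is None or now < self.pending[1]:
            return False
        self.owner_key, self.pending = self.pending[0], None
        return True


wallet = RecoverableWallet("old-key", "correct horse")
t0 = 1_700_000_000
print(wallet.request_recovery("new-key", "wrong guess", t0))    # False
print(wallet.request_recovery("new-key", "correct horse", t0))  # True
print(wallet.finalize_recovery(t0 + 60))                        # False: still waiting
print(wallet.finalize_recovery(t0 + CONFIRMATION_PERIOD))       # True
print(wallet.owner_key)                                         # new-key
```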

2 - Sequencing

Transactions need to be ordered before being added to a block, which can be done in many ways: ordering by profitability to the proposer (highest paying transactions first), in the order they were submitted (first-in, first-out), giving priority to transactions from private mempools, etc.

Another question is who gets to order the transactions. In a modular world, multiple different parties can do this including the rollup sequencer (centralized or decentralized), L1 sequencing (based rollups), and a shared sequencing network (decentralized network of sequencers used by multiple rollups). All of these have different trust assumptions and capacities to scale. In practice, the actual ordering of transactions and bundling them into a block can also be done outside the protocol by specialized actors (block builders).

Existing ZK Integrations

Verifying correct encryption of mempool: Radius is a shared sequencing network that has an encrypted mempool with Practical Verifiable Delay Encryption (PVDE). Users generate a ZKP which is used to prove that solving the time-lock puzzles will lead to the correct decryption of valid transactions, i.e. that the transaction includes a valid signature and nonce and the sender has enough balance to pay the transaction fee.
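PVDE builds on time-lock puzzles. The minimal sketch below shows the classic Rivest-Shamir-Wagner time-lock puzzle, not Radius's actual construction: whoever creates the puzzle knows the factorization of `N` and can compute `x^(2^t) mod N` instantly, while everyone else has to perform `t` squarings one after another, an operation believed to be inherently sequential. The ZKP that Radius users attach (proving the puzzle decrypts to a valid transaction) is not shown. The toy primes, the SHA-256 keystream, and the example payload are assumptions for illustration.

```python
import hashlib

# Toy RSA modulus built from two small primes; real puzzles use ~2048-bit moduli
# whose factorization is thrown away after the puzzle is created.
P, Q = 10007, 10009
N = P * Q
PHI = (P - 1) * (Q - 1)


def keystream(y: int, length: int) -> bytes:
    """Expand the puzzle solution y into a one-time pad with SHA-256 in counter mode."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(f"{y}:{counter}".encode()).digest()
        counter += 1
    return out[:length]


def lock(message: bytes, t: int, x: int = 2) -> bytes:
    """Creator knows PHI, so x^(2^t) mod N costs only two fast exponentiations."""
    y = pow(x, pow(2, t, PHI), N)
    return bytes(m ^ k for m, k in zip(message, keystream(y, len(message))))


def unlock(ciphertext: bytes, t: int, x: int = 2) -> bytes:
    """Without PHI, the solver performs t sequential modular squarings."""
    y = x % N
    for _ in range(t):
        y = y * y % N
    return bytes(c ^ k for c, k in zip(ciphertext, keystream(y, len(ciphertext))))


T = 100_000  # difficulty: number of sequential squarings
sealed = lock(b"swap 1 ETH for USDC", T)
print(unlock(sealed, T))  # b'swap 1 ETH for USDC'
```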

Open Problems That ZKPs Could Solve

Verifiable Sequencing Rules (VSR): Subjecting the proposer/sequencer to a set of rules regarding execution ordering, with additional guarantees that these rules are followed. Verification can be done either through ZKPs or fraud proofs; the latter require a large enough economic bond that is slashed if the proposer/sequencer misbehaves.
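To illustrate what "verifiable" means here, the minimal sketch below expresses two of the ordering policies described earlier in this section as sort orders, plus a rule check for first-in, first-out. The check is the kind of predicate a validity proof would attest to, or a fraud proof would show is violated. The `Tx` fields and function names are hypothetical, and a real VSR would also need to cover which transactions were included, not just their order.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tx:
    tx_id: str
    arrival: int  # time the sequencer received the transaction
    fee: int      # fee offered to the proposer


def order_fifo(txs: list[Tx]) -> list[Tx]:
    """First-in, first-out; tx_id breaks ties deterministically."""
    return sorted(txs, key=lambda tx: (tx.arrival, tx.tx_id))


def order_by_fee(txs: list[Tx]) -> list[Tx]:
    """Highest-paying transactions first."""
    return sorted(txs, key=lambda tx: (-tx.fee, tx.arrival, tx.tx_id))


def follows_fifo(block: list[Tx]) -> bool:
    """The rule check a ZKP or fraud proof would be about."""
    return all(a.arrival <= b.arrival for a, b in zip(block, block[1:]))


mempool = [Tx("a", arrival=3, fee=5), Tx("b", arrival=1, fee=1), Tx("c", arrival=2, fee=9)]
print([tx.tx_id for tx in order_fifo(mempool)])    # ['b', 'c', 'a']
print([tx.tx_id for tx in order_by_fee(mempool)])  # ['c', 'a', 'b']
print(follows_fifo(order_by_fee(mempool)))         # False
```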

3 - Execution (Scaling Writes)

The execution layer contains the logic of how the state is updated and it’s where smart contracts are executed. In addition to returning an output of the computation, zkVMs also enable proving that state transitions were done correctly. This enables other network participants to verify correct execution by only verifying the proof, rather than having to re-execute transactions.

Besides faster and more efficient verification, another benefit of provable execution is enabling more complex computation, since you don’t run into the typical issues of gas and limited on-chain resources with off-chain computation. This opens the door to entirely new applications that are computationally more intense to run on blockchains and leverage guaranteed computation.

Existing ZK Integrations

zkEVM rollups: A special type of zkVM optimized to be compatible with Ethereum and prove the EVM-execution environment. The closer the Ethereum compatibility, however, the bigger the tradeoff in performance. Several zkEVMs launched in 2023, including Polygon zkEVM, zkSync Era, Scroll, and Linea. Polygon recently announced their type 1 zkEVM prover, which enables proving mainnet Ethereum blocks for $0.20-$0.50 per block (with upcoming optimizations to reduce costs further). RISC Zero also has a solution that can prove Ethereum blocks, though at a higher cost based on the limited benchmarking available.

Alternative zkVMs: Some protocols are taking an alternative path and optimizing for performance/provability (Starknet, Zorp) or developer friendliness, rather than trying to be maximally compatible with Ethereum. Examples of the latter include zkWASM protocols (Fluent, Delphinus Labs) and zkMOVE (M2 and zkmove).

Privacy-focused zkVMs: In this case, ZKPs are used for two things: avoiding re-execution and achieving privacy. While the privacy that can be achieved with ZKPs alone is limited (only personal private state), upcoming protocols add a lot of expressivity and programmability to existing solutions. Examples include Aleo’s snarkVM, Aztec's AVM, and Polygon’s MidenVM.

ZK-coprocessors: Enables off-chain compute on on-chain data (but without state). ZKPs are used to prove correct execution, giving faster settlement than optimistic co-processors, but there is a tradeoff in cost. Given the cost and/or difficulty of generating ZKPs, we are seeing some hybrid versions, such as Brevis coChain, which allows developers to choose between ZK or optimistic mode (tradeoff between cost and hardness of guarantees).

Open Problems That ZKPs Could Solve

Enshrined zkVM: Most base layers (L1s) still use re-execution to verify correct state transitions. Enshrining a zkVM to the base layer would avoid this, as validators could verify the proof instead. This would improve operational efficiency. Most eyes are on Ethereum with an enshrined zkEVM, but many other ecosystems also rely on re-execution.

zkSVM: While the SVM is mostly used within Solana L1 today, teams like Eclipse are trying to leverage the SVM for rollups that settle on Ethereum. Eclipse is also planning to use RISC Zero for ZK fraud proofs for potential challenges of state transitions in the SVM. A full-blown zkSVM, however, hasn’t yet been explored, probably due to the complexity of the problem and the fact that the SVM is optimized for properties other than provability.

4 - Data Query (Scaling Reads)

Data query, or reading data from the blockchain, is an essential part of most applications. While much of the discussion and efforts over recent years have focused on scaling writes (execution) - scaling reads is even more important due to the imbalance between the two (particularly in a decentralized setting). The ratio between read/write differs between blockchains, but one data point is Sig’s estimate that >96% of all calls to nodes on Solana were read-calls (based on 2 years of empirical data) - a read/write ratio of 24:1.
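As a quick check, the 24:1 figure follows from the read share alone: if more than 96% of calls are reads, fewer than 4% are writes, so

```latex
\frac{\text{reads}}{\text{writes}} > \frac{0.96}{1 - 0.96} = \frac{0.96}{0.04} = 24
```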

Scaling reads includes both getting more performance (more reads/second) with dedicated validator clients (such as Sig on Solana) and enabling more complex queries (combining reads with computation), for example with the help of co-processors.

Another angle is decentralizing the methods of data query. Today, most data query requests in blockchains are facilitated by trusted third parties (reputation-based), such as RPC nodes (Infura) and indexers (Dune). Examples of more decentralized options include The Graph and storage-proof operators (which are also verifiable). There are also several attempts at creating a decentralized RPC network, such as Infura DIN or Lava Network (besides decentralized RPC, Lava aims to offer additional data access services later).

Existing ZK Integrations

Storage proofs: Enables querying both historical and current data from blockchains without using trusted third parties. ZKPs are used for compression and to prove that the correct data was retrieved. Examples of projects building in this space include Axiom, Brevis, Herodotus, and Lagrange.
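The building block underneath storage proofs is a Merkle inclusion proof: given only a trusted root (for example from a block header), anyone can check that a value is part of the committed data using a logarithmic number of sibling hashes. The minimal sketch below uses a simple binary SHA-256 tree with hypothetical example values; Ethereum's state actually uses Merkle-Patricia tries, and storage-proof projects wrap such checks in ZKPs to compress many of them into one proof. Pairing an odd last node with itself and the lack of leaf/node domain separation are toy simplifications, not production choices.

```python
import hashlib


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def build_levels(leaves: list[bytes]) -> list[list[bytes]]:
    """Hash leaves pairwise up to a single root; an odd last node is paired with itself."""
    level = [sha256(leaf) for leaf in leaves]
    levels = [level]
    while len(level) > 1:
        padded = level + [level[-1]] if len(level) % 2 else level
        level = [sha256(padded[i] + padded[i + 1]) for i in range(0, len(padded), 2)]
        levels.append(level)
    return levels


def prove_inclusion(levels: list[list[bytes]], index: int) -> list[tuple[bytes, bool]]:
    """Collect sibling hashes from leaf to root; the flag marks a right-hand sibling."""
    path = []
    for level in levels[:-1]:
        sibling = index ^ 1
        sibling_hash = level[sibling] if sibling < len(level) else level[index]
        path.append((sibling_hash, index % 2 == 0))
        index //= 2
    return path


def verify_inclusion(root: bytes, leaf: bytes, path: list[tuple[bytes, bool]]) -> bool:
    """Recompute the root from the leaf and its path; needs only the trusted root."""
    node = sha256(leaf)
    for sibling_hash, sibling_is_right in path:
        node = sha256(node + sibling_hash) if sibling_is_right else sha256(sibling_hash + node)
    return node == root


values = [b"balance:alice=10", b"balance:bob=7", b"balance:carol=3"]
levels = build_levels(values)
root = levels[-1][0]  # the commitment a block header would carry
proof = prove_inclusion(levels, 1)
print(verify_inclusion(root, b"balance:bob=7", proof))   # True
print(verify_inclusion(root, b"balance:bob=70", proof))  # False
```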

Open Problems That ZKPs Could Solve

Efficient Querying of Private State: Privacy projects often use a variation of the UTXO model, which enables better privacy features than the account model but comes at the expense of developer-friendliness. The private UTXO model can also lead to syncing issues - something Zcash has struggled with since 2022 after experiencing a significant increase in shielded transaction volume. Wallets must sync to the chain before being able to spend funds, so this is quite a fundamental challenge to the workings of the network. In anticipation of this problem, Aztec recently posted an RFP for ideas regarding note discovery but no clear solution has yet been found.

5 - Proving

With more and more applications incorporating ZKPs, proving and verification are quickly becoming an essential part of the modular stack. Today, however, most proving infrastructure is still permissioned and centralized with many applications relying on a single prover.

While the centralized solution is less complex, decentralizing the proving architecture and splitting it into a separate component in the modular stack brings several benefits. One key benefit comes in the form of liveness guarantees which are crucial for applications that depend on frequent proof generation. Users also benefit from higher censorship resistance and lower fees driven by competition and sharing the workload amongst multiple provers.

We believe general-purpose prover networks (many apps, many provers) are superior to single-application prover networks (one app, many provers) due to higher utilization of existing hardware and less complexity for provers. Higher utilization also benefits users in terms of lower fees, as provers don’t need to be compensated for redundancy through higher fees (though fees still need to cover fixed costs).

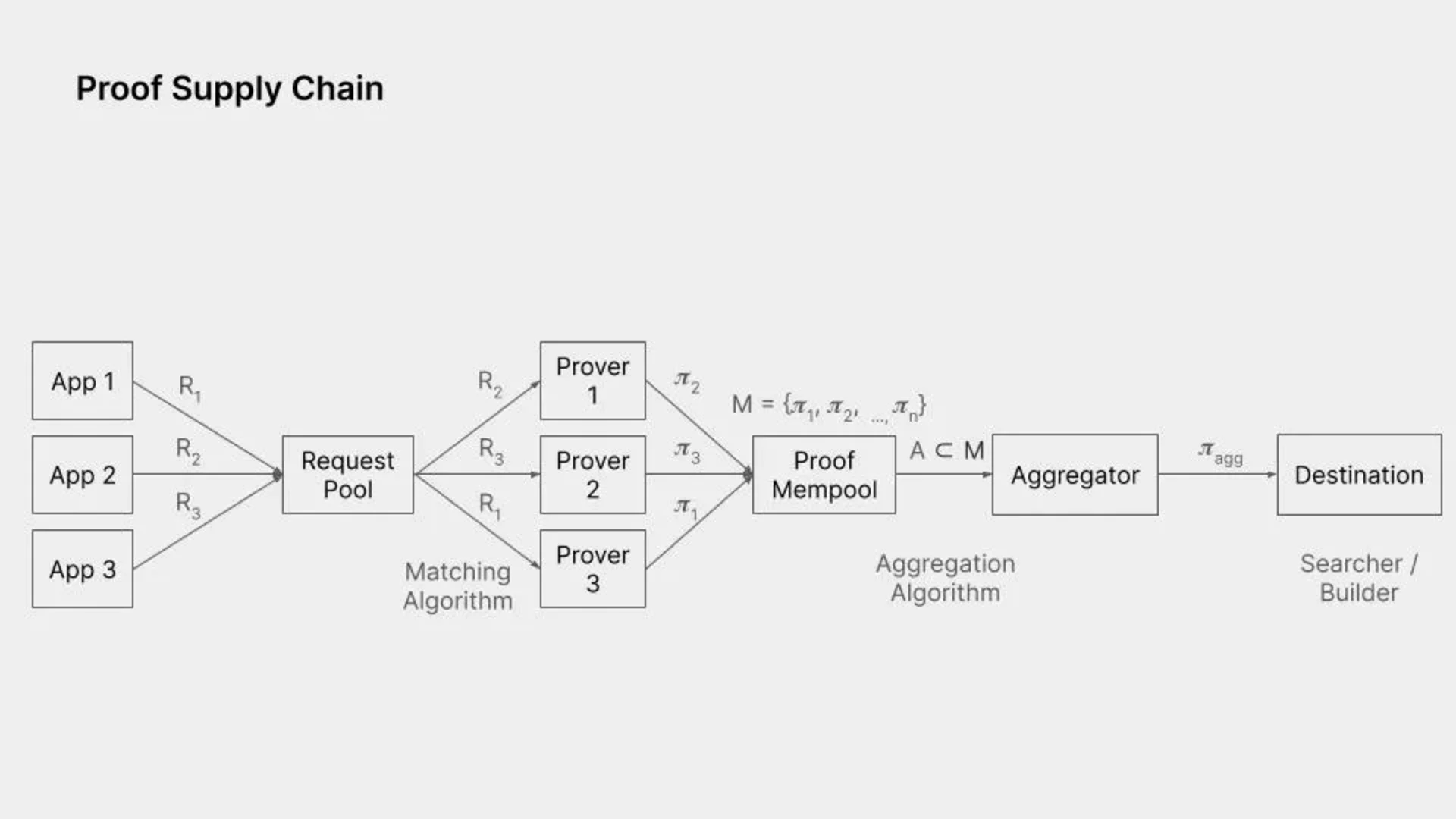

Figment Capital provided a good overview of the current state of the proof supply chain, which consists of both proof generation and proof aggregation (which is itself proof generation, just taking two proofs as input rather than an execution trace).

Source: Figment Capital

Existing ZK Integrations

STARK with SNARK wrapper: STARK provers are fast and don’t require a trusted setup, but the downside is that they produce large proofs which are prohibitively expensive to verify on Ethereum L1. Wrapping the STARK in a SNARK as the final step makes it significantly cheaper to verify on Ethereum. On the downside, this adds complexity, and the security of these “compounded proof systems” hasn’t been studied in depth. Examples of existing implementations include Polygon zkEVM, Boojum in zkSync Era, and RISC Zero.

General-purpose decentralized proof networks: Integrating more applications into a decentralized proving network makes it more efficient for provers (higher utilization of hardware) and cheaper for users (don’t need to pay for the hardware redundancy). Projects in this field include Gevulot and Succinct.

Open Problems That ZKPs Could Solve

ZK Fraud Proofs: In optimistic solutions, anyone can challenge the state transition and create a fraud proof during the challenge period. However, verifying the fraud proof is still quite cumbersome as it’s done through re-execution. ZK fraud proofs aim to solve this issue by creating a proof of the state transition that’s being challenged, which enables more efficient verification (no need to re-execute) and potentially faster settlement. This is being worked on by at least Optimism (in collaboration with O1 Labs and RISC Zero), and AltLayer x RISC Zero.

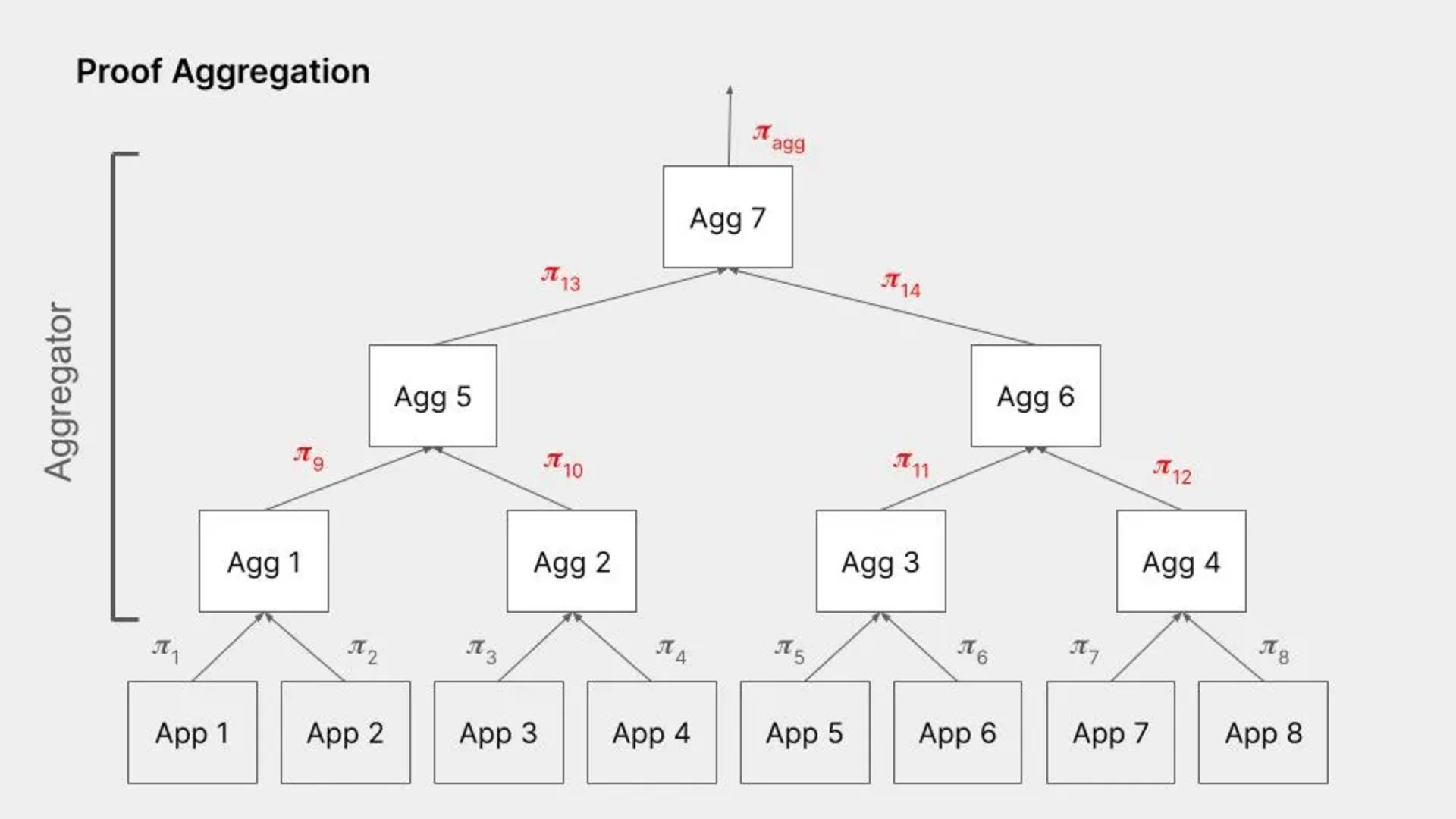

More efficient proof aggregation: A great feature of ZKPs is that you can aggregate several proofs into a single proof, without increasing verification costs significantly. This enables amortizing the cost of verification over multiple proofs or applications. Proof aggregation is also proving, but the input is two proofs instead of an execution trace. Examples of projects in this space include NEBRA and Gevulot.

Source: Figment Capital
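A back-of-the-envelope model of the amortization described above: let V be the on-chain cost of verifying one proof and V_agg the cost of verifying a single aggregated proof that covers n proofs, assumed (as the paragraph above suggests) to stay close to V as n grows. Off-chain proving costs for the aggregation step are ignored here.

```latex
\underbrace{n \cdot V}_{\text{verify } n \text{ proofs separately}}
\quad \text{vs.} \quad
\underbrace{V_{\text{agg}}}_{\text{verify one aggregated proof}},
\qquad
\text{on-chain cost per proof} = \frac{V_{\text{agg}}}{n} \approx \frac{V}{n}
```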

6 - Data Publication (Availability)

Data publication (DP) ensures data is available and easily retrievable for a short period (1-2 weeks). This is crucial for both security (optimistic rollups require input data to verify correct execution by re-execution during the challenge period, 1-2 weeks) and liveness (even if a system uses validity proofs, the underlying transaction data might be needed to prove asset ownership for escape hatches, forced transactions or to verify that inputs match outputs). Users (such as zk-bridges and rollups) face a one-off payment, which covers the cost of storing transactions and state for a short period until it’s pruned. Data publication networks are not designed for long-term data storage (instead, see next section for possible solutions).

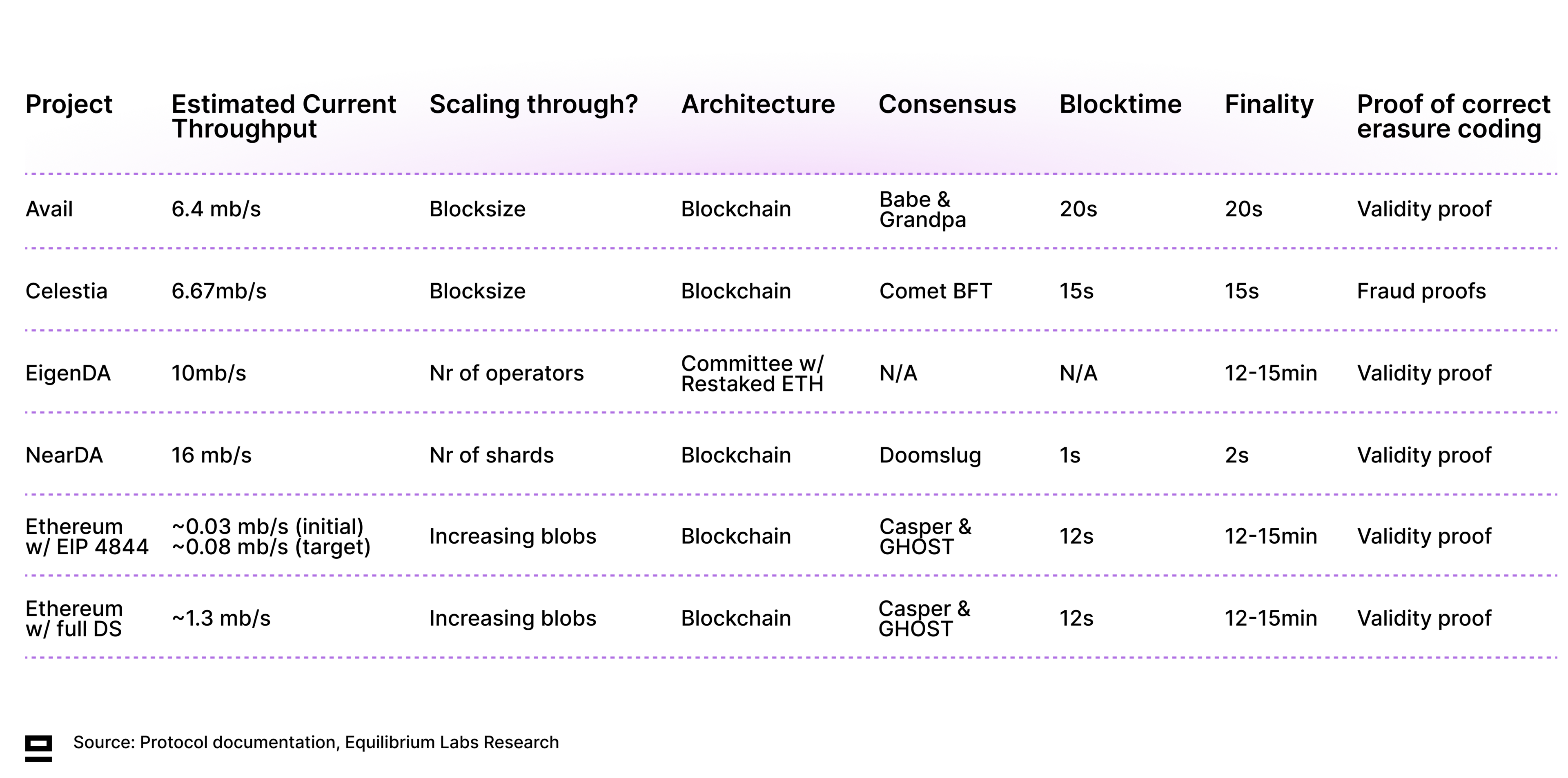

Celestia was the first alternative DP layer to launch its mainnet (October 31st, 2023), but there will soon be many alternatives to choose from, as Avail, EigenDA, and Near DA are all expected to launch during 2024. Meanwhile, Ethereum’s EIP-4844 upgrade scaled data publication on Ethereum (in addition to creating a separate fee market for blob storage) and set the stage for full danksharding. DP is also expanding to other ecosystems, one example being Nubit, which aims to build native DP on Bitcoin.

Many DP solutions also offer services beyond pure data publication, including shared security for sovereign rollups (such as Celestia and Avail) or smoother interoperability between rollups (such as Avail's Nexus). There are also projects (Domicon and Zero Gravity) that offer both data publication as well as long-term state storage, which is a compelling proposal. This is also an example of re-bundling two components in the modular stack, something we’ll likely see more of going forward (experimentation with both further unbundling and re-bundling).

Existing ZK Integrations

Proving correctness of erasure coding: Erasure coding adds a certain level of redundancy so that the original data is recoverable even if part of the encoded data is not available. It’s also a prerequisite for data availability sampling (DAS), where light nodes sample only a small part of the block to probabilistically ensure the data is there. If a malicious proposer encodes the data incorrectly, the original data might not be recoverable even if light nodes sample sufficient unique chunks. Proving correct erasure coding can be done either using validity proofs (ZKPs) or fraud proofs, the latter suffering from latency related to the challenge period. All other solutions except Celestia are working on using validity proofs.
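A minimal one-dimensional Reed-Solomon sketch over a prime field shows both properties at play: k data chunks are treated as evaluations of a polynomial of degree below k and extended to 2k evaluations, so any k surviving chunks recover the original. It also shows what "correct encoding" means: all 2k values lie on one such polynomial, which is exactly what a fraud proof disputes or a validity proof attests to. The field, chunk values, and function names are assumptions; Celestia and others use two-dimensional schemes with far larger parameters.

```python
PRIME = 2**61 - 1  # a Mersenne prime; all arithmetic happens in this prime field


def interpolate_at(points: list[tuple[int, int]], x: int) -> int:
    """Evaluate at x the unique polynomial of degree < len(points) through `points`."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % PRIME
                den = den * (xi - xj) % PRIME
        total = (total + yi * num * pow(den, -1, PRIME)) % PRIME
    return total


def extend(data: list[int]) -> list[int]:
    """Treat k chunks as evaluations at x = 0..k-1 and extend them to x = 0..2k-1."""
    points = list(enumerate(data))
    return [interpolate_at(points, x) for x in range(2 * len(data))]


def recover(available: dict[int, int], k: int) -> list[int]:
    """Rebuild the original k chunks from ANY k surviving (index, value) pairs."""
    points = list(available.items())[:k]
    return [interpolate_at(points, x) for x in range(k)]


def is_consistent(encoded: list[int], k: int) -> bool:
    """Correct encoding: all 2k values lie on a single polynomial of degree < k."""
    base = list(enumerate(encoded[:k]))
    return all(interpolate_at(base, x) == y for x, y in enumerate(encoded))


data = [5, 17, 42, 99]                             # k = 4 original chunks
encoded = extend(data)                             # 8 chunks after extension
survivors = {i: encoded[i] for i in (1, 4, 6, 7)}  # half of the chunks lost
print(recover(survivors, k=4) == data)             # True
print(is_consistent(encoded, k=4))                 # True
tampered = encoded[:5] + [encoded[5] + 1] + encoded[6:]
print(is_consistent(tampered, k=4))                # False
```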

ZK light clients powering data bridges: Rollups that use external data publication layers still need to communicate to the settlement layer that data has been published properly. This is what data attestation bridges are for. Using ZKPs makes verification of the source chain’s consensus signatures more efficient on Ethereum. Both Avail’s (VectorX) and Celestia’s (BlobstreamX) data attestation bridges are powered by ZK light clients built together with Succinct.

Open Problems That ZKPs Could Solve

Celestia incorporating validity proofs for correct erasure coding: Celestia is currently an outlier amongst the data publication networks as it uses fraud proofs for correct erasure coding. If a malicious block proposer encodes the data incorrectly, any other full node can generate a fraud proof and challenge this. While this approach is somewhat simpler to implement, it also introduces latency (the block is only final after the fraud proof window) and requires light nodes to trust one honest full node to generate the fraud proof (they can’t verify the encoding themselves). However, Celestia is exploring combining their current Reed-Solomon encoding with a ZKP to prove correct encoding, which would reduce time to finality significantly. The latest discussion around this topic can be found here with recordings from previous working groups (in addition to more general attempts of adding ZKPs to the Celestia base layer).

ZK-proving DAS: There have been some explorations on ZK-proving data availability, where light nodes would simply verify the Merkle root and a ZKP, rather than having to do the usual sampling by downloading small chunks of data. This would reduce the requirements for light nodes even further, but it seems that development has stalled.
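For context on what the usual sampling buys, here is a minimal sketch of the standard DAS confidence calculation under simplifying assumptions: a one-dimensional 2x extension (so blocking recovery requires withholding more than half of the chunks) and independent, uniformly random samples with replacement. The two-dimensional schemes used in practice have different thresholds, and the function names are hypothetical.

```python
def undetected_probability(available_fraction: float, samples: int) -> float:
    """Chance that every one of `samples` independent uniform queries hits an available chunk."""
    return available_fraction ** samples


def samples_needed(available_fraction: float, target: float) -> int:
    """Smallest number of samples that pushes the undetected probability to `target` or below."""
    if not 0 <= available_fraction < 1:
        raise ValueError("available_fraction must be in [0, 1)")
    samples, prob = 0, 1.0
    while prob > target:
        prob *= available_fraction
        samples += 1
    return samples


# With a 2x extension, an attacker must withhold more than half of the chunks,
# so at most half remain available to answer a light node's queries.
print(undetected_probability(0.5, 20))  # ~9.54e-07
print(samples_needed(0.5, 1e-9))        # 30
```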

7 - Long-Term (State) Storage

Storing historical data is important mainly for syncing purposes and serving data requests. However, it’s not feasible for every full node to store all data and most full nodes prune old data to keep hardware requirements reasonable. Instead, we rely on specialized parties (archive nodes and indexers) to store all historical data and make it available at the request of users.

There are also decentralized storage providers, such as Filecoin or Arweave, that offer long-term decentralized storage solutions at a reasonable price. While most blockchains don’t have a formal process of archive storage (they simply rely on someone storing it), decentralized storage protocols are good candidates for storing historical data and adding some redundancy (at least X nodes store the data) through the storage network’s built-in incentives.

Existing ZK Integrations

Proof of storage: Long-term storage providers need to generate ZKPs regularly to prove that they have stored all the data they claim. An example of this is Filecoin’s proof of spacetime (PoSt), where storage providers earn block rewards each time they successfully answer a PoSt challenge.

Open Problems That ZKPs Could Solve

Proving data provenance and authorization to view sensitive data: With two untrusted parties that want to exchange sensitive data, ZKPs could be used to prove that one party has the required credentials to view the data without having to upload actual documents or disclose passwords and login details.

8 - Consensus

Given that blockchains are distributed P2P systems, there is no trusted third party that determines the global truth. Instead, nodes of the network agree on what the current truth is (which block is the right one) through a mechanism called consensus. PoS-based consensus methods can be categorized as either BFT-based (where the Byzantine fault-tolerant quorum of validators decides the final state) or chain-based (where the final state is decided retrospectively by the fork choice rule). While most existing PoS consensus implementations are BFT-based, Cardano is an example of a longest-chain implementation. There is also growing interest in DAG-based consensus mechanisms such as Narwhal-Bullshark, which is implemented in some variation across Aleo, Aptos, and Sui.
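The "Byzantine fault-tolerant quorum" in the BFT-based family rests on a simple counting argument: with n validators and at most f Byzantine ones where n ≥ 3f + 1, any two quorums larger than two-thirds of n overlap in more than f validators, so they always share at least one honest validator and cannot finalize conflicting blocks. The minimal sketch below computes these textbook numbers assuming equal voting power; PoS protocols typically apply the same thresholds to stake rather than validator count, and the function names are hypothetical.

```python
def max_byzantine_faults(n: int) -> int:
    """Largest f with n >= 3f + 1, the classic bound for BFT consensus."""
    return (n - 1) // 3


def quorum_size(n: int) -> int:
    """Smallest set strictly larger than two-thirds of n validators."""
    return (2 * n) // 3 + 1


for n in (4, 10, 100):
    print(n, max_byzantine_faults(n), quorum_size(n))
# 4 1 3
# 10 3 7
# 100 33 67
```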

Consensus is a crucial part of many different components of the modular stack, including shared sequencers, decentralized proving, and blockchain-based data publication networks (as opposed to committee-based ones such as EigenDA).

Existing ZK Integrations

Staking in ZK-based privacy networks: PoS-based privacy networks pose a challenge, as holders of the staking tokens must choose between privacy and participating in consensus (and receiving staking rewards). Penumbra aims to solve this by eliminating staking rewards, instead treating unbonded and bonded stakes as separate assets. This method keeps individual delegations private, while the total amount bonded to each validator is still public.

Private governance: Achieving anonymous voting has long been a challenge in crypto, with projects such as Nouns Private Voting trying to push this forward. The same challenge applies to protocol governance, where anonymous voting on proposals is being worked on by at least Penumbra. In this instance, ZKPs can be used to prove that one has the right to vote (for example through token ownership) and that certain voting criteria are fulfilled (for example, that the voter hasn’t already voted).
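The "hasn’t already voted" criterion is commonly enforced with nullifiers, a pattern familiar from Zcash-style shielded pools: each voter derives a value from a private secret and the proposal ID, the ballot box rejects any nullifier it has seen before, and different proposals yield unlinkable nullifiers. The minimal sketch below is a hypothetical illustration, not Penumbra's or Nouns' design; the ZKP that the nullifier belongs to an eligible voter is represented only by a boolean placeholder.

```python
import hashlib


def nullifier(voter_secret: bytes, proposal_id: str) -> str:
    """Deterministic per (voter, proposal), but unlinkable across proposals without the secret."""
    return hashlib.sha256(voter_secret + b"|" + proposal_id.encode()).hexdigest()


class BallotBox:
    def __init__(self, proposal_id: str):
        self.proposal_id = proposal_id
        self.spent_nullifiers: set[str] = set()
        self.tally = {"yes": 0, "no": 0}

    def cast(self, vote_nullifier: str, choice: str, proof_is_valid: bool) -> bool:
        # PLACEHOLDER: `proof_is_valid` stands for verifying a ZKP that the nullifier was
        # derived from the secret of an eligible voter (e.g. a token holder).
        if not proof_is_valid or choice not in self.tally:
            return False
        if vote_nullifier in self.spent_nullifiers:  # this voter already voted
            return False
        self.spent_nullifiers.add(vote_nullifier)
        self.tally[choice] += 1
        return True


box = BallotBox("proposal-42")
n1 = nullifier(b"alice-secret", box.proposal_id)
print(box.cast(n1, "yes", proof_is_valid=True))  # True
print(box.cast(n1, "no", proof_is_valid=True))   # False: same nullifier, double vote
print(box.tally)                                 # {'yes': 1, 'no': 0}
```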

Open Problems That ZKPs Could Solve

Private leader election: Ethereum currently elects the next 32 block proposers at the beginning of each epoch and the results of this election are public. This creates the risk of a malicious party launching a DoS attack against each proposer sequentially to try to disable Ethereum. In an attempt to tackle this problem, Whisk is a proposal for a privacy-preserving protocol for electing block proposers on Ethereum. ZKPs are used by validators to prove that the shuffling and randomization were performed honestly. There are other approaches as well to achieve a similar end goal, some of which are covered in this blog post by a16z.

Signature Aggregation: Using ZKPs for aggregating signatures could significantly reduce the communication and computation overhead of signature verification (verify one aggregated proof rather than each individual signature). This is something that’s already leveraged in ZK light clients but could potentially be expanded to consensus as well.

9 - Settlement

Settlement is akin to the highest court of justice - the final source of truth where the correctness of state transitions is verified and disputes are settled. A transaction is considered final at the point when it is irreversible (or in the case of probabilistic finality - at the point where it would be sufficiently hard to reverse it). The time to finality depends on the underlying settlement layer used, which in turn depends on the specific finality rule used and block time.

Slow finality is particularly a problem in cross-rollup communication, where rollups need to wait for confirmation from Ethereum before being able to approve a transaction (7 days for optimistic rollups; about 12 minutes plus proving time for validity rollups). This leads to poor user experience. Several efforts aim to solve this with pre-confirmations backed by some level of security. Examples include both ecosystem-specific solutions (Polygon AggLayer or zkSync HyperBridge) and general-purpose solutions such as Near's Fast Finality Layer, which aims to connect multiple different rollup ecosystems by leveraging economic security from EigenLayer. Native rollup bridges could also leverage EigenLayer for soft confirmations to avoid waiting for full finality.

Existing ZK Integrations

Faster settlement with validity rollups: In contrast to optimistic rollups, validity rollups don't require a challenge period, as they instead rely on ZKPs to prove correct state transitions whether or not anyone challenges them (pessimistic rollups). This makes settlement on the base layer much faster (about 12 minutes plus proving time, versus 7 days on Ethereum) and avoids re-execution.
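The 12-minute figure follows from Ethereum's consensus parameters: under normal conditions a block is finalized after roughly two epochs of 32 slots each, with 12-second slots. A worked sketch, where $t_{\text{prove}}$ (our symbol) stands for the time to generate and post the validity proof:

```latex
\begin{aligned}
t_{\text{finality}} &\approx 2 \times 32 \times 12\,\text{s} = 768\,\text{s} \approx 12.8\,\text{min} \\
t_{\text{validity}} &\approx t_{\text{prove}} + t_{\text{finality}} \\
t_{\text{optimistic}} &\approx 7\,\text{days} \quad \text{(challenge period)}
\end{aligned}
```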

10 - Security

Security is related to the hardness of guarantees and is a crucial part of the value proposition of blockchains. However, bootstrapping cryptoeconomic security is hard: it raises barriers to entry and creates friction for innovation in the applications that need it (various middleware and alternative L1s).

The idea of shared security is to reuse existing economic security from PoS networks by subjecting it to additional slashing risk (conditions for punishment), rather than having each component bootstrap its own. There were earlier attempts to do the same in PoW networks (merged mining), but misaligned incentives made it easier for miners to collude and exploit a protocol: bad behavior is harder to punish when the work happens in the physical world (mining using computing power). PoS security is easier for other protocols to reuse, as it has both positive (staking yield) and negative (slashing) incentives.
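A common way to reason about shared security is to compare the cost of corrupting the shared stake with the total profit an attacker could extract by attacking every service secured by that stake at once. The sketch below is a simplified model with hypothetical function names. It assumes a single corruption threshold (for example, 1/3 of stake to halt a BFT protocol) and that all corrupting stake gets slashed; it is not the exact model of EigenLayer or any other protocol.

```python
from fractions import Fraction


def cost_of_corruption(total_stake: Fraction, threshold: Fraction) -> Fraction:
    """Stake an attacker must control, and would lose to slashing."""
    return total_stake * threshold


def is_secure(
    total_stake: Fraction,
    threshold: Fraction,
    profits_from_corruption: list[Fraction],
) -> bool:
    """Secure only if corrupting the shared stake costs more than attacking
    every service that relies on it simultaneously would pay."""
    return cost_of_corruption(total_stake, threshold) > sum(profits_from_corruption)
```

Each additional service that reuses the same stake adds to the profit side without adding to the cost side, which is why restaking needs careful accounting of what the pooled stake is securing.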

Protocols building around the premise of shared security include:

EigenLayer aims to leverage existing Ethereum security to secure a wide range of applications. The whitepaper was released in early 2023, and EigenLayer is currently in mainnet alpha, with full mainnet expected to launch later this year.

Cosmos launched its Interchain Security (ICS) in May 2023, which enables the Cosmos Hub - one of the largest chains on Cosmos and backed by ~$2.4bn of staked ATOM - to lease its security to consumer chains. By using the same validator set that powers the Cosmos Hub to also validate blocks on the consumer chain, it aims to reduce the barrier to launching new chains on top of the Cosmos stack. Currently, however, only two consumer chains are live (Neutron and Stride).

Babylon is trying to enable BTC to be used for shared security as well. To counter the problems of merged mining (bad behavior is hard to punish), it's building a virtual PoS layer where users can lock BTC into a staking contract on Bitcoin (no bridging). Since Bitcoin lacks an expressive smart contract layer, the slashing rules of staking contracts are instead expressed as UTXO transactions written in Bitcoin Script.

Other networks with restaking include Octopus on Near and Picasso on Solana. Polkadot parachains also leverage the concept of shared security.

Existing ZK Integrations

Mix between ZK and economic security: While ZK-based security guarantees might be stronger, proving is still prohibitively expensive for some applications, and generating proofs takes too long. One example of a mixed approach is Brevis coChain, a co-processor that gets its economic security from ETH restakers and guarantees computation optimistically (with ZK fraud proofs). dApps can choose between pure-ZK and coChain mode, depending on their specific security and cost tradeoffs.

11 - Interoperability

Safe and efficient interoperability remains a big issue in a multi-chain world, exemplified by the $2.8bn lost in bridge hacks. In modular systems, interoperability becomes even more important: not only do chains communicate with other chains, but the components of a modular blockchain (such as the DA and settlement layers) also need to communicate with each other. Hence, it is no longer feasible to simply run a full node or verify a single consensus proof as in integrated blockchains, which adds more moving pieces to the equation.

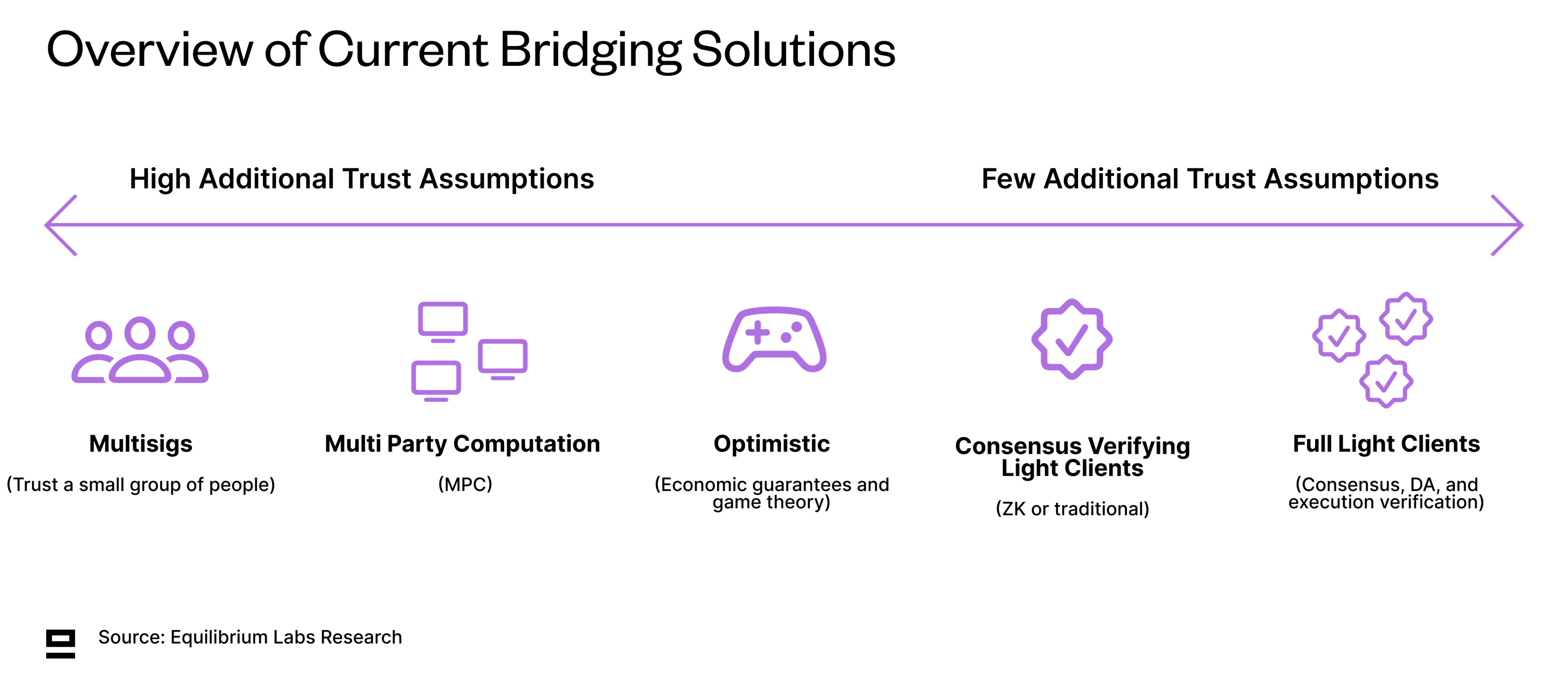

Interoperability includes both token bridging and more general message passing across blockchains. Several options exist, each making different tradeoffs between safety, latency, and cost. Optimizing for all three at once is very hard and usually requires sacrificing at least one. In addition, differing standards across chains make implementations on new chains more difficult.

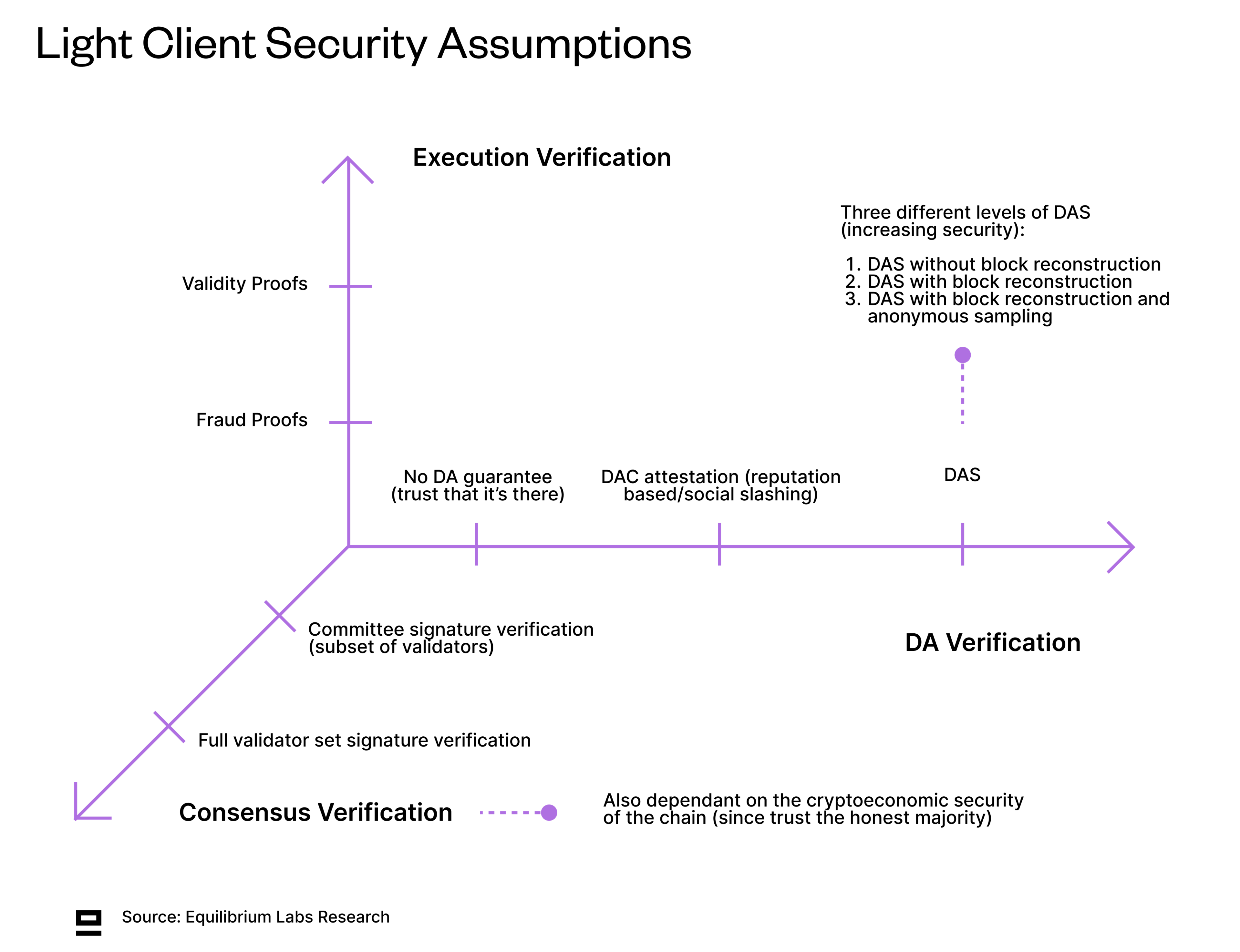

While we still lack a clear definition of the different types of light clients (or nodes), this post by Dino (co-founder of Fluent & Modular Media) gives a good introduction. Most light clients today only verify consensus, but ideally we would have light clients that can verify execution and DA as well to reduce trust assumptions. This would allow getting close to full-node security, without the high hardware requirements.

Existing ZK Integrations

ZK light clients (consensus verification): Most current light clients verify the other chain's consensus, checking either the full validator set (if small enough) or a subset of the total validators (such as Ethereum's sync committee). ZKPs are used to make verification quicker and cheaper, since the signature scheme used on the origin chain might not be natively supported on the destination chain. While the importance of ZK light clients in bridging is expected to increase, current frictions for wider adoption include the cost of proving and verification, along with the need to implement ZK light clients for each new chain. Examples of protocols in this space include Polyhedra, Avail's and Celestia's data attestation bridges, and zkIBC by Electron Labs.

Storage proofs: As mentioned before, storage proofs enable querying both historical and current data from blockchains without using trusted third parties. This is also relevant for interoperability as they could be used for cross-chain communication. For example, a user could prove they have tokens on one chain and use that for governance on another chain (without needing to bridge). There are also attempts to use storage proofs for bridging, such as this solution developed by LambdaClass.
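At their core, storage proofs show that a value is committed to by a block's state root. Ethereum actually uses Merkle-Patricia tries, and production systems often wrap the check in a ZKP. The sketch below simplifies this to a plain binary SHA-256 Merkle tree with hypothetical function names, to show the inclusion check a destination chain would run against a root it already trusts. It also omits leaf/node domain separation, which a real design should add.

```python
import hashlib

Proof = list[tuple[bytes, bool]]  # (sibling_hash, sibling_is_left) per level


def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root_and_proof(leaves: list[bytes], index: int) -> tuple[bytes, Proof]:
    """Return the root and the sibling path for leaves[index].

    Odd-sized levels duplicate their last node.
    """
    level = [_h(leaf) for leaf in leaves]
    proof: Proof = []
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        sibling = index ^ 1  # the paired node at this level
        proof.append((level[sibling], sibling < index))
        level = [_h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return level[0], proof


def verify_inclusion(root: bytes, leaf: bytes, proof: Proof) -> bool:
    """Recompute the path from the leaf and compare against the trusted root."""
    node = _h(leaf)
    for sibling, sibling_is_left in proof:
        node = _h(sibling + node) if sibling_is_left else _h(node + sibling)
    return node == root
```

For the cross-chain governance example above, the destination chain only needs a trusted root of the source chain (for example, from a light client) plus this short proof to accept that a user holds tokens there.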

ZK Oracles: Oracles act as intermediaries that bridge real-world data to the blockchain. ZK oracles improve on current reputation-based oracle models by making it possible to prove the origin and integrity of the data, along with any computation performed on it.

Open Problems That ZKPs Could Solve

Full light clients: Rather than blindly trusting the other chain's validator set, full light clients also verify correct execution and DA. This reduces trust assumptions and gets closer to full-node security, while still keeping hardware requirements low (allowing more people to run light clients). However, verifying anything other than consensus is still prohibitively expensive on most chains, especially Ethereum. In addition, light clients only solve half of the problem: they can identify that information is false, but an additional mechanism is still needed to act on it.
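Verifying DA without downloading all the data usually relies on data availability sampling. The sketch below shows the underlying probability under stated assumptions: data is extended with a rate-1/2 erasure code (so an adversary must withhold at least half of the extended chunks to prevent reconstruction), and samples are drawn independently and uniformly with replacement. Function names are ours.

```python
import math


def detection_probability(withheld_fraction: float, samples: int) -> float:
    """Chance that at least one of `samples` uniform random queries hits a withheld chunk."""
    return 1.0 - (1.0 - withheld_fraction) ** samples


def samples_needed(withheld_fraction: float, target_confidence: float) -> int:
    """Smallest sample count whose detection probability reaches the target."""
    return math.ceil(
        math.log(1.0 - target_confidence) / math.log(1.0 - withheld_fraction)
    )
```

With a 50% withholding threshold, 7 samples already give more than 99% confidence, and in this model the number does not grow with block size, which is what keeps light-client hardware requirements low.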

Aggregation Layers: Polygon's AggLayer aims to enable smooth interoperability between L2s within its ecosystem by leveraging aggregated proofs and a unified bridge contract. The aggregated proof makes verification more efficient and improves security by enforcing that dependent chain states and bundles are consistent, ensuring that a rollup state can't be settled on Ethereum if it relies on an invalid state from another chain. zkSync's HyperChains and Avail Nexus are taking a similar approach.
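The consistency rule above can be modeled as a dependency check: a rollup batch may settle only if its own proof is valid and every cross-chain state it relies on can settle too. The toy sketch below uses hypothetical batch IDs and assumes an acyclic dependency graph; it is not Polygon's implementation, which the text describes as enforcing this through the aggregated proof.

```python
from functools import lru_cache


def settleable_batches(
    proof_valid: dict[str, bool], deps: dict[str, list[str]]
) -> set[str]:
    """Return the batches that may settle: valid proof and all dependencies settleable.

    Assumes the dependency graph is acyclic; unknown dependencies count as invalid.
    """

    @lru_cache(maxsize=None)
    def ok(batch: str) -> bool:
        if not proof_valid.get(batch, False):
            return False
        return all(ok(dep) for dep in deps.get(batch, []))

    return {batch for batch in proof_valid if ok(batch)}
```

An invalid batch therefore blocks every batch that transitively depends on it, while unrelated rollups settle normally.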

When Has ZK Eaten The Modular Stack?

Assuming that we can reach a state where the generation of ZKPs becomes very fast (almost at the speed of light) and incredibly cheap (almost free), what does the end game look like? In other words - when has ZK eaten the modular stack?

Broadly speaking, we believe two things would be true in this state of the world:

All unnecessary re-execution is eliminated: By moving to a 1/N model of execution (rather than N/N with re-execution), we significantly reduce the overall redundancy of the network and enable more efficient use of the underlying hardware. While some overhead remains, this would help blockchains get asymptotically closer to centralized systems in terms of computational efficiency.
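The redundancy argument can be made concrete with a simple cost model, assuming $N$ nodes, a per-node execution cost $C_{\text{exec}}$, a one-time proving cost $C_{\text{prove}}$, and a per-node verification cost $C_{\text{verify}}$ (symbols are ours):

```latex
\begin{aligned}
\text{N/N (re-execution):}\quad & C_{\text{total}} = N \cdot C_{\text{exec}} \\
\text{1/N (prove once, verify everywhere):}\quad & C_{\text{total}} = C_{\text{prove}} + N \cdot C_{\text{verify}} \\
\text{1/N is cheaper when:}\quad & N \left( C_{\text{exec}} - C_{\text{verify}} \right) > C_{\text{prove}}
\end{aligned}
```

Since succinct proofs make $C_{\text{verify}} \ll C_{\text{exec}}$, the 1/N model wins once $N$ is large enough to amortize $C_{\text{prove}}$, and the residual $N \cdot C_{\text{verify}}$ term is the overhead that remains.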

Most applications rely on ZK-enabled cryptographic guarantees rather than economic security: When the cost and time to generate proofs are no longer relevant considerations, we believe most applications will rely on ZKPs for stronger guarantees. This also requires improvements in usability and developer friendliness for building ZK applications, but these are all problems that multiple teams are working on.

A third condition would be around privacy (or information flow management), but it's more complicated. ZKPs can be used for some privacy applications with client-side proving, which is what platforms like Aleo, Aztec, and Polygon Miden are building for, but achieving wide-scale privacy for all potential use cases relies on the progress of MPC and FHE as well, a potential topic for a future blog post.

Risks To Our Thesis

What if we’re wrong, and the future is neither modular nor ZK’fied? Some potential risks to our thesis include:

Modularity increases complexity

Both users and developers suffer from the ever-growing number of chains. Users need to manage funds across multiple chains (and potentially multiple wallets). Application developers, on the other hand, have less stability and predictability given how much the space is still evolving, making it harder to decide which chain to build on. They also need to think about state and liquidity fragmentation. This is particularly true now, as we are still experimenting along the frontier of which components make sense to decouple and which will be recoupled. We believe user operation abstraction, as well as safe and efficient interoperability solutions, are crucial to solving this problem.

Will ZK ever be performant enough?

There is no getting around the fact that proof generation takes too long and the cost of both proving and verification is still far too high today. Competing solutions such as trusted execution environments (TEEs) for privacy, or optimistic/cryptoeconomic security solutions for cost, still make more sense for many applications today.

A lot of work, however, is being done on software optimization and hardware acceleration for ZKPs. Proof aggregation will help reduce verification costs further by spreading the cost over multiple parties (lower cost per user). There is also the possibility of adapting the base layer to be better optimized for the verification of ZKPs. One challenge for hardware acceleration is the rapid development of proving systems. This makes it hard to create specialized hardware (ASICs), as it risks becoming obsolete quickly if and when the underlying proving systems evolve.

Ingonyama has attempted to create some benchmarking for prover performance through a comparable metric called ZK score. It’s based on the cost of running computation (OPEX) and tracks MMOPS/WATT, where MMOPS stands for modular multiplication operations per second. For further reading on the topic, we recommend blogs by Cysic and Ingonyama, as well as this talk by Wei Dai.
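Unpacking the units shows what the ZK score captures: dividing a throughput (operations per second) by power (joules per second) leaves operations per joule, i.e. energy efficiency.

```latex
\text{ZK score} = \frac{\text{MMOPS}}{\text{W}}
= \frac{\text{modular multiplications} / \text{s}}{\text{J} / \text{s}}
= \frac{\text{modular multiplications}}{\text{J}}
```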

Is the limited privacy that ZKPs can provide useful?

ZKPs can only be used to achieve privacy for personal state, not shared state where multiple parties need to compute on encrypted data (such as a private Uniswap). FHE and MPC are also needed for full privacy, but these need to improve by many orders of magnitude in cost and performance before becoming viable options for wider-scale usage. That said, ZKPs are still useful for certain use cases that don't require private shared state, such as identity solutions or payments. Not all problems need to be solved with the same tool.

Summary

So where does this leave us? While we are making progress each day, lots of work remains. The most pressing issues are figuring out how value and information can flow safely between different modular components without sacrificing speed or cost, and abstracting it all away from end users so that they don't need to be concerned with bridging between different chains, switching wallets, etc.

While we are currently still very much in the experimentation phase, things should stabilize over time as we figure out where on the spectrum the optimal tradeoffs are for each use-case. That in turn will give room for standards (informal or formal) to emerge and give more stability to builders on top of these chains.

Today there are still many use cases that default to cryptoeconomic security due to the cost and complexity of generating ZKPs, and some that require a combination of the two. However, this share should decrease over time as we design more efficient proving systems and specialized hardware to bring down the cost and latency of proving & verification. With each exponential reduction in cost and speed, new use cases are unlocked.

While this piece focused on ZKPs specifically, we are also increasingly interested in how modern cryptography solutions (ZKPs, MPC, FHE, and TEEs) will end up playing together, something we are already seeing.

Thanks for reading!
